NVIDIA founder and CEO Jensen Huang announced an updated GH200 Grace Hopper Superchip, the NVIDIA AI Workbench, and an updated NVIDIA Omniverse with generative AI in a wide-ranging SIGGRAPH keynote.

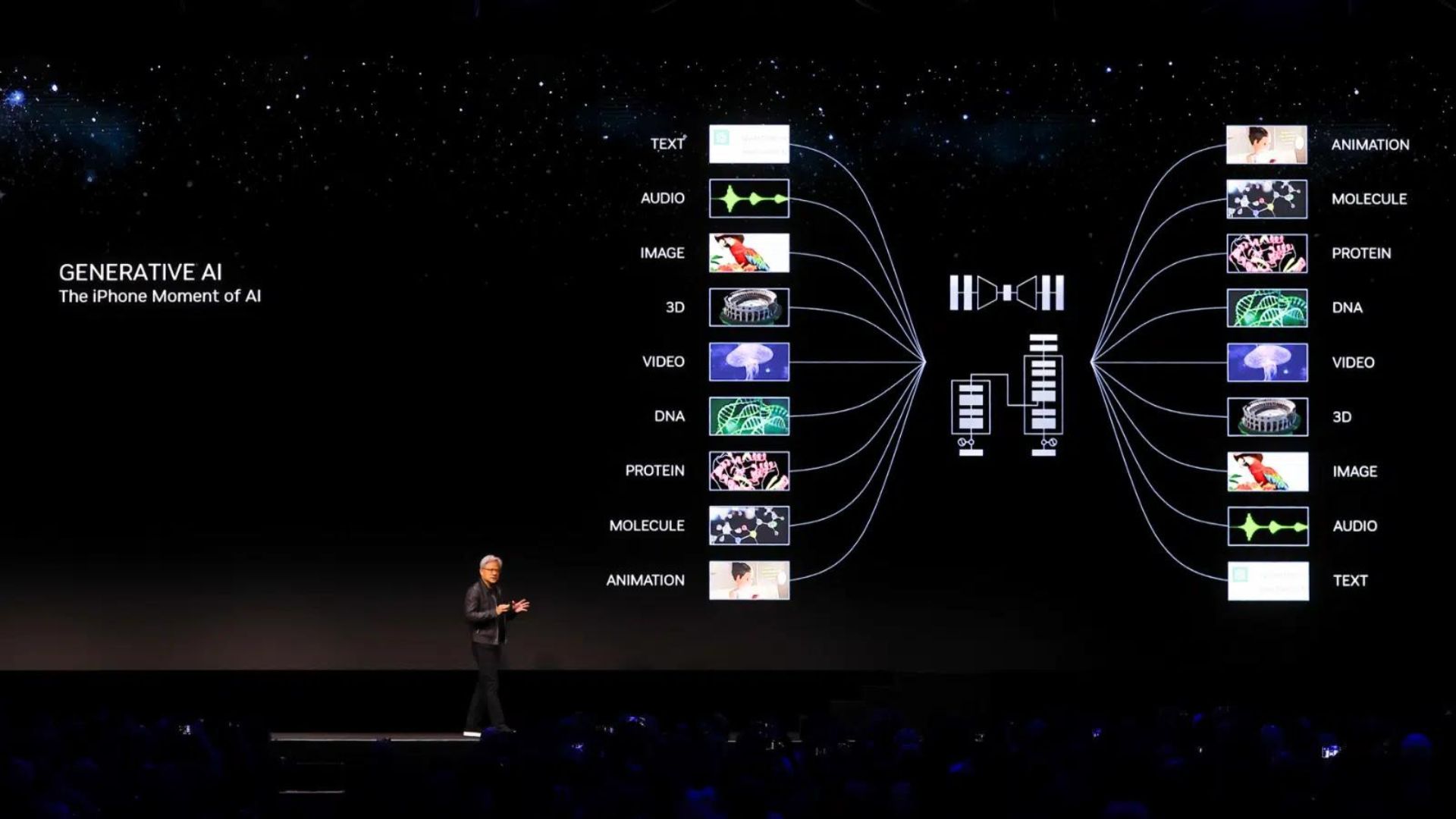

NVIDIA founder and CEO Jensen Huang put in a fairly bravura performance at this year’s SIGGRAPH keynote, with much of his roughly 90-minute address concentrating on generative AI.

You can have a look at the full keynote at the bottom of the page, but for those of you just looking for the highlights, these are the main points.

A new Grace Hopper Superchip

The NVIDIA GH200 Grace Hopper Superchip, which combines a 72-core Grace CPU with a Hopper GPU, went into full production in May. Three months is, of course, an age when it comes to anything involving AI, so one of Huang’s first reveals was that an additional version with HBM3e memory will be commercially available next month.

Beyond that, a whole new next-generation version is due next year, which will let users connect multiple GPUs for exceptional performance and easily scalable server designs. NVIDIA reckons that its dual configuration, a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of HBM3e memory, delivers up to 3.5x more memory capacity and 3x more bandwidth than the current generation. That is a substantial jump.

A new Omniverse

A major new release of Omniverse brings generative AI and NVIDIA’s new commitment to OpenUSD together for what Huang calls Industrial Digitalization.

Updates to the Omniverse platform include:

- Advancements to Omniverse Kit — the engine for developing native OpenUSD applications and extensions — as well as to the NVIDIA Omniverse Audio2Face foundation app and spatial-computing capabilities.

- Cesium, Convai, Move AI, SideFX Houdini and Wonder Dynamics are now connected to Omniverse via OpenUSD.

- Expanding their collaboration across Adobe Substance 3D, generative AI and OpenUSD initiatives, Adobe and NVIDIA revealed plans to make Adobe Firefly — Adobe’s family of creative generative AI models — available as APIs in Omniverse.

- Omniverse users can now build content, experiences and applications that are compatible with other OpenUSD-based spatial computing platforms such as ARKit and RealityKit.

Huang also announced a broad range of frameworks, resources and services for developers and companies to accelerate the adoption of Universal Scene Description, including contributions such as geospatial data models, metrics assembly and simulation-ready, or SimReady, specifications for OpenUSD. Frankly, the momentum behind the specification is starting to look unstoppable.

He also unveiled four new Omniverse Cloud APIs, built by NVIDIA to help developers implement and deploy OpenUSD pipelines and applications more seamlessly.

ChatUSD — Assisting developers and artists working with OpenUSD data and scenes, ChatUSD is a large language model (LLM) agent for generating Python-USD code scripts from text and answering USD knowledge questions.

RunUSD — a cloud API that translates OpenUSD files into fully path-traced rendered images by checking compatibility of the uploaded files against versions of OpenUSD releases, and generating renders with Omniverse Cloud.

DeepSearch — an LLM agent enabling fast semantic search through massive databases of untagged assets.

USD-GDN Publisher — a one-click service that enables enterprises and software makers to publish high-fidelity, OpenUSD-based experiences to the Omniverse Cloud Graphics Delivery Network (GDN) from an Omniverse-based application such as USD Composer, as well as stream in real time to web browsers and mobile devices.

NVIDIA AI Workbench

Huang bills the NVIDIA AI Workbench as a unified, easy-to-use toolkit for quickly creating, testing and fine-tuning generative AI models on a PC or workstation, then scaling them up to run in virtually any data center or public cloud.

The idea is that the Workbench removes much of the complexity of getting started with an enterprise AI project and lets developers easily fine-tune models from popular repositories. Hundreds of thousands of pretrained models are already available in the rapidly mushrooming AI market, and customizing them with the many open-source tools on offer can be challenging and time-consuming, to say the least.

The AI Workbench is meant to provide a shortcut, and leading AI infrastructure providers (the likes of Dell Technologies, Hewlett Packard Enterprise, HP Inc., Lambda, Lenovo and Supermicro) are on board, as is startup Hugging Face, which already has 2 million users. All of this will put generative AI supercomputing at the fingertips of millions of developers building large language models and other advanced AI applications, reckons Huang. We await the results with what can only be described as a small amount of trepidation.

And that’s not all...

As we said, the speech was wide-ranging.

Huang also said that NVIDIA and global workstation manufacturers, including BOXX, Dell Technologies, HP and Lenovo, are announcing powerful new RTX workstations based on NVIDIA RTX 6000 Ada Generation GPUs and incorporating NVIDIA AI Enterprise and NVIDIA Omniverse Enterprise software.

Separately, NVIDIA released three new desktop workstation Ada Generation GPUs — the NVIDIA RTX 5000, RTX 4500 and RTX 4000 — to deliver the latest AI, graphics and real-time rendering technology to professionals worldwide.

And at the show’s Real-Time Live! event, NVIDIA researchers also demonstrated a generative AI workflow that helps artists rapidly create and iterate on materials for 3D scenes, using text or image prompts to generate custom textured materials faster and with finer creative control than before.

Have a look at all of it below.
