Until now, one of the main stumbling blocks for truly great AI image generation was user control. The ControlNet assistant for Stable Diffusion solves that and hands the power back to the artist.

If you've tried any of the new AI image generators, such as Stable Diffusion, the one thing that strikes you is how little overall control you have. Sure, we've seen some impressive examples of what is possible, but they have usually been the result of hundreds of attempts at image generation.

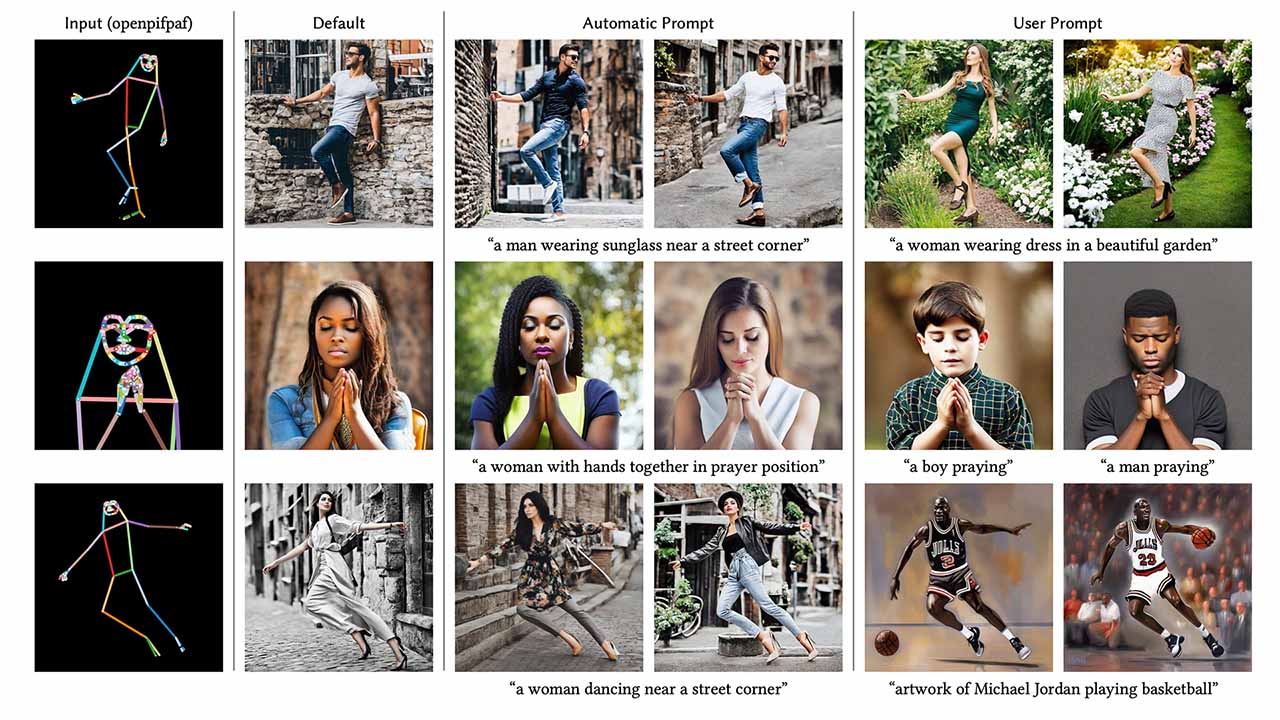

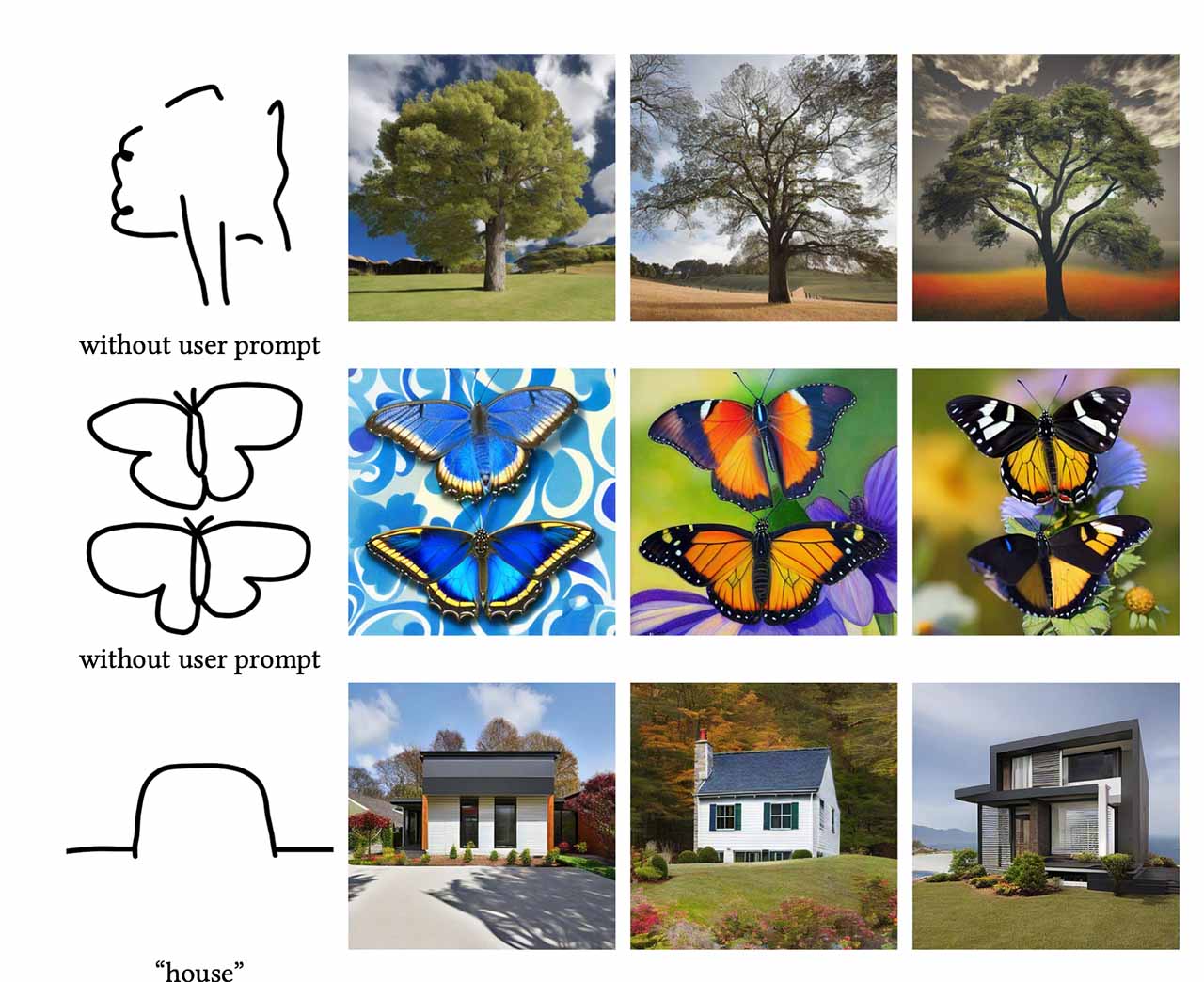

Now there's a new assistant on the block that integrates with Stable Diffusion to give users a huge amount of control over the final image. Called ControlNet, the new system lets users choose between several different generation models, which range from giving the AI a basic sketch or a guide photograph to a stick-man style drawing that tells it how to pose a person in the picture.

Creating an image from a simple 'scribble'. Image: Lvmin Zhang and Maneesh Agrawala, Stanford University

Additionally, you can now guide the AI as to which parts of a generated image you'd like to keep, and how to change some aspects of it. One side effect of all this is that odd artefacts, such as weird-looking hands or fingers, have been almost eliminated overnight. So powerful is the new system, in fact, that some users have been using it to modify the lighting in 2D images, creating animations that cast shadows and shapes as if they had been filmed on location.

Taking back control with ControlNet

ControlNet is the result of research by Lvmin Zhang and Maneesh Agrawala at Stanford University. It allows conditional inputs, such as edge maps and segmentation maps amongst others, to guide the AI in creating the required image. It also enables the AI to create useful imagery even when it has only been trained on a very small dataset.
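To make the idea of a conditional input a little more concrete, here is a minimal sketch of how an edge map might be prepared from a photo before it is handed to ControlNet. It is an illustration only, not the researchers' code: it swaps in a simple Sobel gradient filter for the Canny edge detector that ControlNet's edge model is built around, it assumes a grayscale image already scaled to the 0 to 1 range, and it assumes the conditioning image should be a three-channel picture the same size as the output. The function name `sobel_edge_map` and its `threshold` parameter are hypothetical.

```python
import numpy as np


def sobel_edge_map(gray: np.ndarray, threshold: float = 0.25) -> np.ndarray:
    """Hypothetical helper: turn a 2D grayscale image (values 0..1) into a
    white-on-black, 3-channel edge map of the kind used as a conditioning input.

    Simplification: uses Sobel gradients rather than the Canny detector.
    """
    if gray.ndim != 2:
        raise ValueError("expected a 2D grayscale image")
    gray = gray.astype(np.float64)

    # Sobel kernels for horizontal (kx) and vertical (ky) intensity changes
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
    ky = kx.T

    # Repeat border pixels so the output keeps the input's size
    padded = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    gx = np.zeros_like(gray)
    gy = np.zeros_like(gray)

    # Slide each 3x3 kernel over the image by summing shifted copies
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window

    # Edge strength at each pixel, normalised so the strongest edge is 1.0
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak < 1e-9:
        # A flat image has no meaningful edges
        edges = np.zeros(gray.shape, dtype=np.uint8)
    else:
        edges = ((magnitude / peak) >= threshold).astype(np.uint8) * 255

    # Stack into three identical channels (assumed RGB-style conditioning image)
    return np.stack([edges] * 3, axis=-1)


# Usage: a white square on a black background yields only its outline
img = np.zeros((8, 8))
img[2:6, 2:6] = 1.0
cond = sobel_edge_map(img)
print(cond[..., 0])
```

In a real workflow you would more likely reach for OpenCV's `cv2.Canny` to produce the edge map; the point here is simply that the "guide" ControlNet receives is an ordinary image that outlines the structure the final picture should follow, while the text prompt still decides what fills it in.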

It's all still very much in the experimental stages, and requires a bit of technical knowledge to get it running on your own PC. However, there are some online demos available to help you try out the new methods.

For the input sequence I use a short animation made from a photogrammetry 3d scan I did a few years back of my parents living room in India.

— Bilawal Sidhu (@bilawalsidhu) February 20, 2023

- Top: Output generated with ControlNet + EbSynth

- Bottom: Input video sequence from my 3D scan pic.twitter.com/h0VihtKGW8

A powerful example of ControlNet at work

As I mentioned, there are several ways you can use ControlNet to get the results you want from Stable Diffusion, and the model you use will depend on your goal. For example, some models are better suited to human portrait generation, whilst others are better for landscapes or objects. The online demo mentioned above can give you a taster of what is possible, but by far the most reliable and powerful option is to install the system on your own computer.

Doing this requires some familiarity with the command line, so it currently isn't for the non-technical, but the results being achieved are nothing short of incredible. Exerting fine control over how AI images are generated has always been one of the technology's primary stumbling blocks, since outputs were so randomised and relied on the luck of the draw. ControlNet and subsequent similar developments are going to change the landscape, offering a degree of maturity that the technology has badly needed. Users are combining ControlNet with other systems, such as DreamBooth, which can use multiple image inputs, and with 3D model posing to achieve even more incredible results.

Relighting 2D images using DreamBooth in conjunction with ControlNet.

The control over lighting in 2D images is of particular note. For video producers, this development could make relighting in post-production a reality. The dream of taking a scene that had to be shot outdoors in less than ideal conditions on a grey, overcast day and giving it golden-hour light is now a highly realistic possibility. In fact, I'd go so far as to say it is now a certainty.

The question of authenticity will always be on people's minds when discussing technology like this. But in the realm of fictional video and film production, it is perfectly acceptable. Whilst it's easy to focus on AI's potential for forgery, fakery and crime, we mustn't lose sight of the benefits it stands to bring to the table.

Using 3D model posing for image generation.

In these terms, 2023 has got off to a great start, and whilst consumer interest in AI photography apps may have taken a hit recently, the technology itself continues to develop at pace. It's only February and we've already seen the first major step change in allowing ultimate control over AI image generation. I can't wait to see what the rest of the year brings.

Read the full paper, including more examples of the system in action.
