The new sensor can capture information at up to 24,000fps and has enormous implications for future applications such as self-driving automobiles.

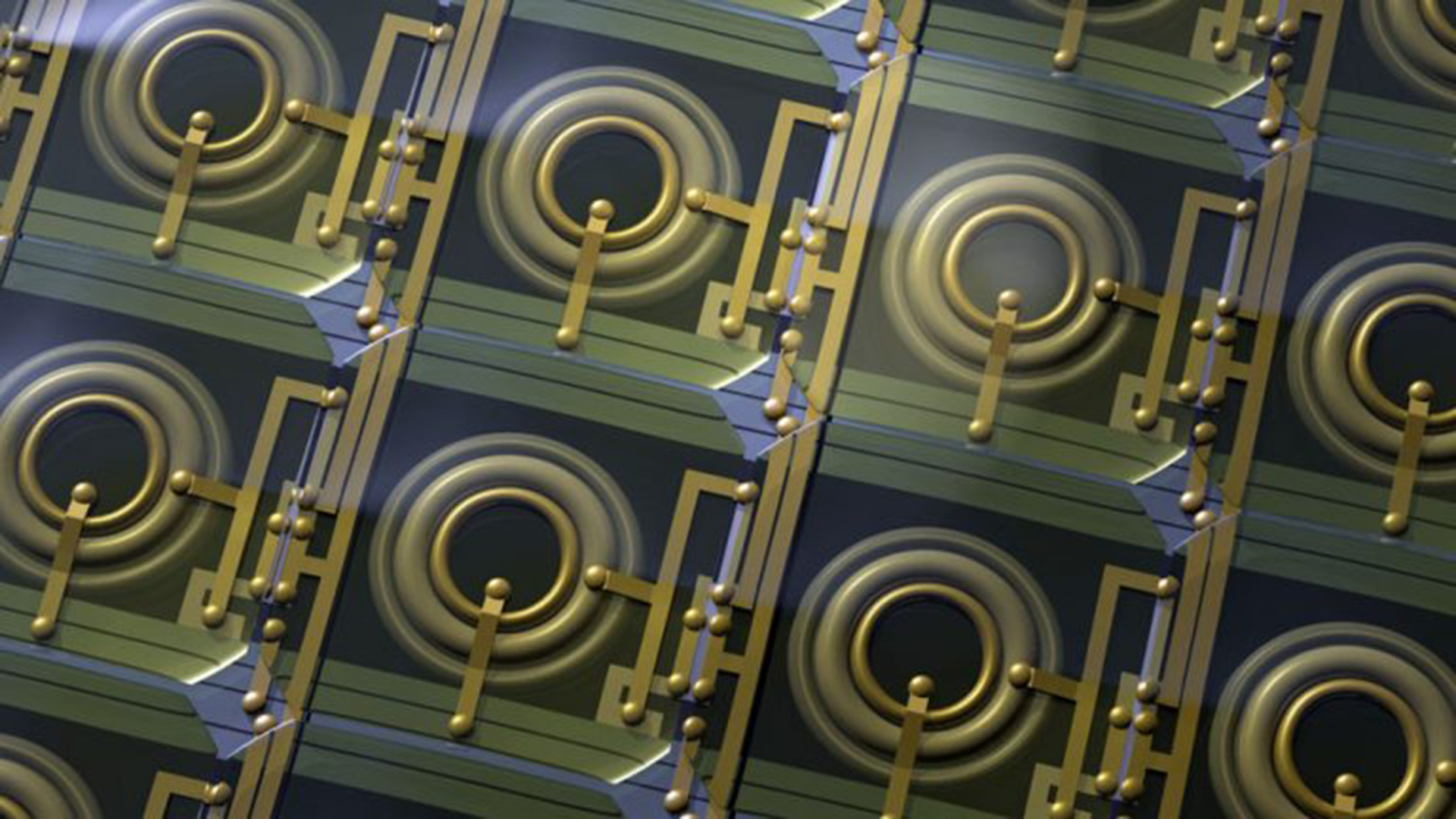

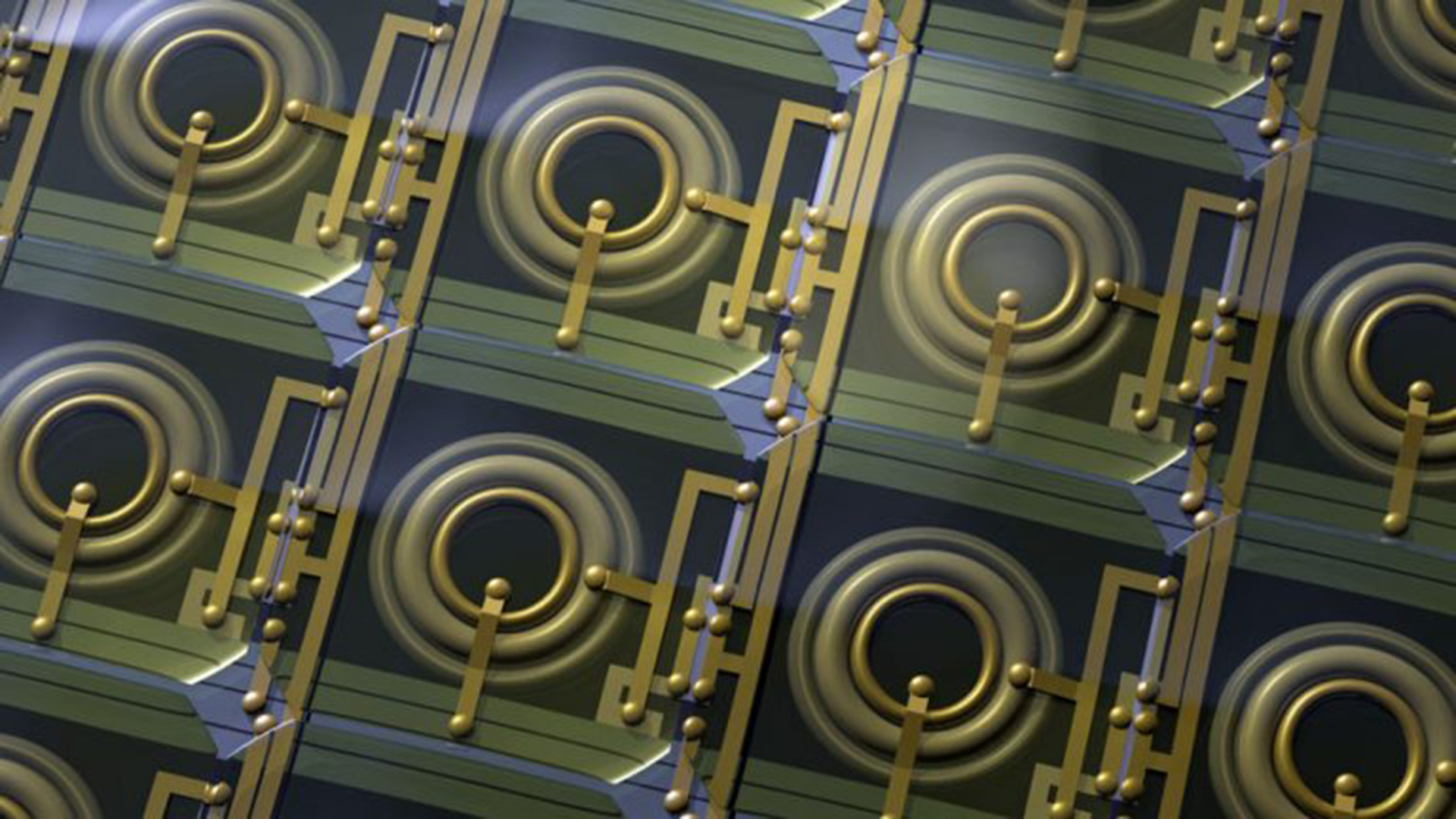

SPAD sensor. Image: Advanced Quantum Architecture Laboratory

A SPAD sensor is not an image sensor in the normal sense of the word. The acronym stands for Single Photon Avalanche Diode. One of these diodes is placed within each pixel, and when hit by an incoming photon of light, it converts this into an avalanche of electrons to create a single large electrical pulse.

What this means is that the sensor can have enormous sensitivity as well as allowing for accurate distance measurements. The applications of SPAD sensors are many and varied. They are already used in smartphones as proximity sensors, and in medicine they have been used to detect radiation to help discover cancer in its early stages. But the applications don’t end there. Not only can they be used to advance autonomous vehicles, but they also have applications in augmented reality, VR, optical communications, and quantum computing, amongst many other things.

New advancements

The trouble is that until now SPAD sensors have been limited in what they can achieve with higher pixel counts. This new sensor uses a method called photon counting to help increase the resolution to 1 megapixel. It also employs a global shutter so that it can have simultaneous control of exposure for each individual pixel. Apparently exposure time can be shortened all the way down to 3.8 nanoseconds, with a maximum frame rate of 24,000fps with 1-bit output.
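To put those figures in perspective, here is a rough back-of-the-envelope sketch of the raw output such a sensor would produce. It assumes exactly 1,000,000 pixels for "1 megapixel" and ignores any readout overhead or compression; the function names are purely illustrative and do not come from the sensor's documentation.

```python
# Rough arithmetic only: assumes 1 megapixel = 1,000,000 pixels and
# no readout overhead. All names here are hypothetical.

def raw_data_rate_bits_per_s(pixels: int, bits_per_pixel: int, fps: int) -> int:
    """Raw, uncompressed output rate in bits per second."""
    return pixels * bits_per_pixel * fps


def frame_interval_s(fps: int) -> float:
    """Time between the start of consecutive frames, in seconds."""
    return 1.0 / fps


if __name__ == "__main__":
    rate = raw_data_rate_bits_per_s(pixels=1_000_000, bits_per_pixel=1, fps=24_000)
    print(f"Raw data rate: {rate / 1e9:.0f} Gbit/s")
    print(f"Frame interval: {frame_interval_s(24_000) * 1e6:.2f} microseconds")
```

Under these assumptions, even 1-bit frames add up to roughly 24 Gbit/s of raw data at the maximum frame rate.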

This enables the analysis of events that might not otherwise be possible to capture, such as lightning strikes, impact damage and so on.

The new sensor is capable of a high time resolution of 100 picoseconds, which enables it to determine the precise timing at which a photon reaches a pixel. This means that the sensor is capable of Time of Flight distance measurement, a method for determining the distance between the sensor and another object. Distance is calculated from how long it takes light emitted from the light source to reflect off the target object and return to the sensor.
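As a simple illustration of the Time of Flight principle, the sketch below converts a round-trip time into a distance using the speed of light, halving the result because the light travels to the target and back. It is a minimal idealised calculation, not the sensor's actual processing, and the function names are hypothetical.

```python
# Idealised Time of Flight arithmetic. Names are illustrative only and
# real systems must also deal with noise, jitter and ambient light.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target, given the light's round-trip time."""
    # Halve the path because the light goes out and comes back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def depth_resolution_m(time_resolution_s: float) -> float:
    """Smallest distance step resolvable for a given timing resolution."""
    return tof_distance_m(time_resolution_s)


if __name__ == "__main__":
    step_cm = depth_resolution_m(100e-12) * 100
    print(f"Depth step at 100 ps: about {step_cm:.1f} cm")
```

By this simple measure, a timing resolution of 100 picoseconds corresponds to a depth step of roughly 1.5cm.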

Whilst the development is not directly related to the camera technology that we usually feature on RedShark, it does have some overlap. Depth sensing is an area that will become increasingly important as both computational photography and video advance. Apple’s iPhone, for example, utilises Time of Flight sensor technology in its FaceID system and combines it with other sensor technologies to make it as effective as it is.

So while this development may not immediately affect the video industry, you can bet that the technology will find its way into our lives in combination with other developments in future devices and cameras.

The new sensor's development is related to work by the Advanced Quantum Architecture Laboratory, the results of which were announced earlier this year. In that case researchers used the sensor in a camera to accurately depict scenes behind a partially transparent window, as well as to produce conventional images with unprecedented dynamic range.

For the full technical breakdown of the sensor's technology you can read this paper from the Swiss Federal Institute of Technology.
