New AI systems can create digital human extras and even generate a playable game from scratch without programmers.

Image: Tel Aviv University

New machine learning techniques have been developed to insert photorealistic people and characters into photos and videogames, with the potential to slash the time and cost of creating digital extras for VFX. Another AI development points the way for entire games to be created without an army of human coders.

The separate breakthroughs have been made in the past few weeks by researchers at Facebook, Electronic Arts and Nvidia.

Image generation has progressed rapidly in recent years due to the advent of generative adversarial networks (GANs), as well as the introduction of sophisticated training methods. However, generation is done either by giving the algorithm “artistic freedom” to produce attractive images, or by specifying concrete constraints such as an approximate drawing or desired keypoints.

Other solutions use a set of semantic specifications or free text, but these have yet to generate high-fidelity human images. What seems to be missing is the middle ground between the two: the ability to automatically generate a human figure that fits contextually into an existing scene.

That’s what Facebook and researchers at Tel Aviv University claim to have cracked.

The creation of artificial humans

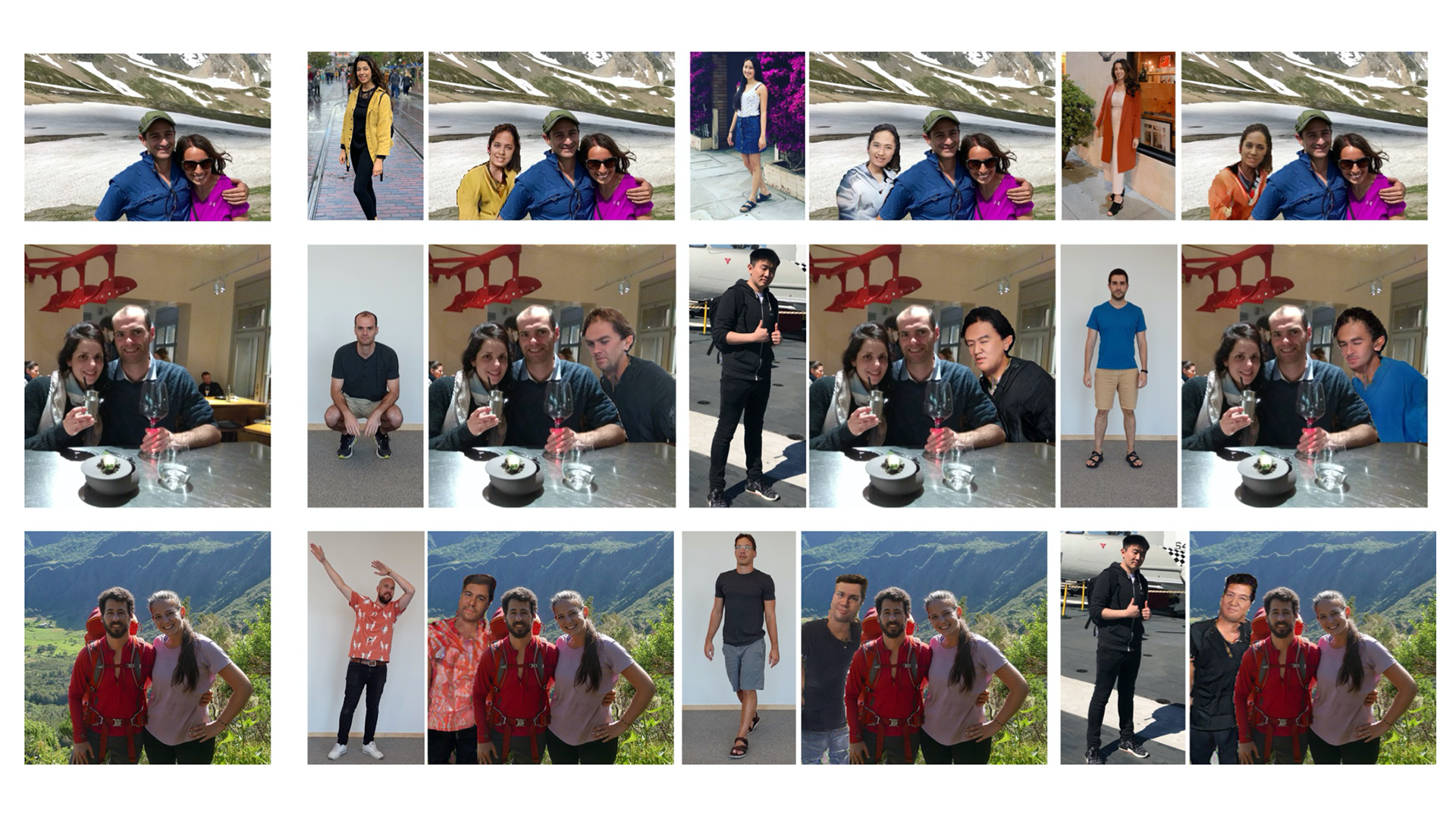

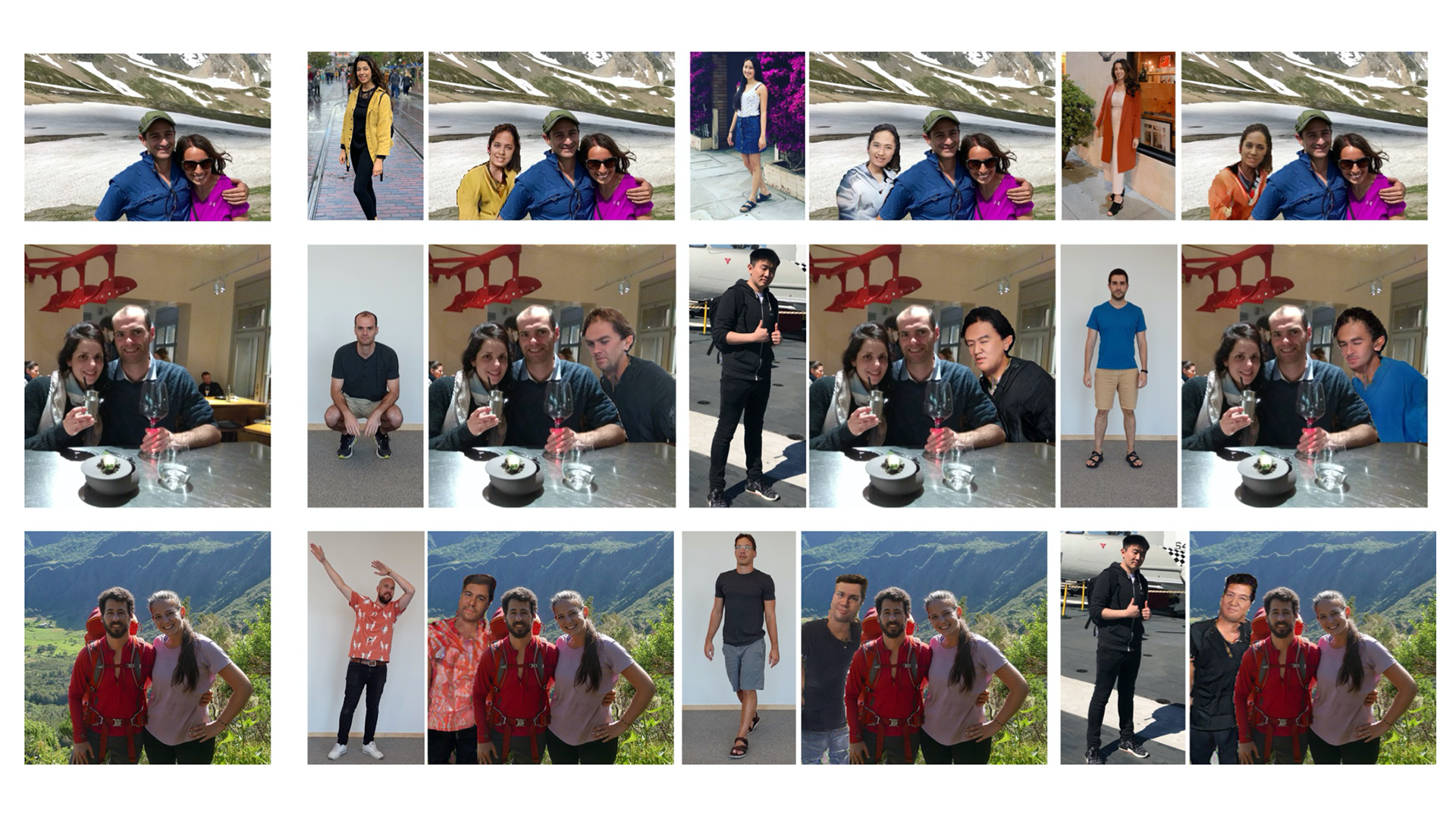

As outlined in a new paper, the AI was trained on more than 20,000 sample photographs from an open-source gallery. The method involves three networks applied in sequence. The first generates the pose of the novel person in the existing image, based on contextual cues about other people in the image. The second renders the pixels of the new person, as well as a blending mask. The third refines the generated face to match that of the target person.
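To make the role of the blending mask concrete, here is a minimal sketch of how a rendered figure might be composited into an existing image. It assumes standard per-pixel alpha blending, which the article does not confirm is the paper's exact formula; the `blend` function and the tiny grayscale "images" are hypothetical and purely illustrative.

```python
from typing import List

# Hypothetical representation: a grayscale image as rows of intensities in [0, 1].
Image = List[List[float]]


def blend(background: Image, rendered: Image, mask: Image) -> Image:
    """Composite `rendered` over `background` with a per-pixel mask in [0, 1].

    Assumption: plain alpha blending, out = mask * rendered + (1 - mask) * background.
    A mask value of 1 keeps the rendered person, 0 keeps the original photo.
    """
    if not (len(background) == len(rendered) == len(mask)):
        raise ValueError("all inputs must have the same number of rows")
    out: Image = []
    for bg_row, fg_row, m_row in zip(background, rendered, mask):
        if not (len(bg_row) == len(fg_row) == len(m_row)):
            raise ValueError("all inputs must have the same row length")
        out.append(
            [m * fg + (1.0 - m) * bg for bg, fg, m in zip(bg_row, fg_row, m_row)]
        )
    return out


if __name__ == "__main__":
    photo = [[0.0, 0.0], [0.0, 0.0]]    # existing image (all dark)
    person = [[1.0, 1.0], [1.0, 1.0]]   # rendered pixels (all bright)
    mask = [[1.0, 0.5], [0.0, 0.0]]     # where the new person should appear
    print(blend(photo, person, mask))
```

Intermediate mask values soften the edge between the inserted figure and the photo, which is one plausible reason a renderer would output a mask alongside the pixels.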

Unlike other applications, such as face swapping, the appearance of the novel person here is controlled by the face, several clothing items, and hair.

The researchers claim to have demonstrated that their technique can create poses that are indistinguishable from real ones, despite the need to take into account all the social interactions in the scene.

They acknowledge that when CG humans are inserted into an existing ‘real’ image the results can stick out like a sore thumb.

But in tests, during which volunteers were asked to see if they could find the artificially-added people in group shots, they only managed to spot the ‘fakes’ between 28 per cent and 47 per cent of the time.

“Our experiments present convincing high-resolution outputs,” the research paper claims. “As far as we can ascertain, [this AI is] the first to generate a human figure in the context of the other persons in the image. [It] provides a state-of-the-art solution for the pose transfer task, and the three networks combined are able to provide convincing ‘wish you were here’ results, in which a target person is added to an existing photograph.”

Saving costs

While inserting folks into frames might not seem like the most practical application of AI, it could be a boon for creative industries where photo and film reshoots tend to be costly. VentureBeat suggests that, using this AI system, a photographer could digitally insert an actor without having to spend hours achieving the right effect in image editing software.

Meanwhile, a team from EA and the University of British Columbia in Vancouver is using a technique called reinforcement learning, which is loosely inspired by the way animals learn in response to positive and negative feedback, to automatically animate humanoid characters.

“The results are very, very promising,” Fabio Zinno, a senior software engineer at EA, told Wired.

AI generated computer games

Traditionally, characters in videogames and their actions are crafted manually. Sports games, such as FIFA, make use of motion capture, a technique that often uses markers on a real person’s face or body, to render more lifelike actions in CG humans. But the possibilities are limited by the actions that have been recorded, and code still needs to be written to animate the character.

By automating the animation process, as well as other elements of game design and development, AI could save game companies millions of dollars while making games more realistic and efficient, so that a complex game can run on a smartphone, for example.

In work to be presented at next month’s (virtual) computer graphics conference, Siggraph, the researchers show that reinforcement learning can create a controllable football player that moves realistically without using conventional coding or animation.

To make the character, the team first trained an AI model to identify and reproduce statistical patterns in motion-capture data. Then they used reinforcement learning to train another model to reproduce realistic motion with a specific objective, such as running toward a ball in the game. Crucially, this produces animations not found in the original motion-capture data. In other words, the program learns how a soccer player moves, and can then animate the character jogging, sprinting, and shimmying by itself.
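The idea of learning from positive and negative feedback toward an objective such as "run toward the ball" can be illustrated with a toy example. The sketch below uses tabular Q-learning on a one-dimensional pitch. It is not the EA and UBC method, which trains neural networks on motion-capture data; the pitch size, ball position, rewards and hyperparameters are all invented for illustration.

```python
import random

N_CELLS = 7          # hypothetical 1-D pitch: cells 0..6
BALL = 5             # hypothetical ball position
ACTIONS = (-1, +1)   # step left or step right


def step(pos, action):
    """Move one cell (clamped to the pitch); return (new_pos, reward, done)."""
    new_pos = min(max(pos + action, 0), N_CELLS - 1)
    if new_pos == BALL:
        return new_pos, 1.0, True    # positive feedback: reached the ball
    return new_pos, -0.01, False     # small negative feedback: time wasted


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    starts = [s for s in range(N_CELLS) if s != BALL]
    for _episode in range(episodes):
        pos = rng.choice(starts)
        for _t in range(50):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(pos, a)])  # exploit
            new_pos, reward, done = step(pos, action)
            best_next = 0.0 if done else max(q[(new_pos, a)] for a in ACTIONS)
            # Standard Q-learning update toward reward + discounted future value.
            q[(pos, action)] += alpha * (
                reward + gamma * best_next - q[(pos, action)]
            )
            pos = new_pos
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action for every cell except the ball's own cell."""
    return {
        s: max(ACTIONS, key=lambda a: q[(s, a)])
        for s in range(N_CELLS)
        if s != BALL
    }


if __name__ == "__main__":
    print(greedy_policy(train()))
```

Nothing in the code tells the agent which direction the ball is in; the preference for stepping toward it emerges from the rewards alone, which is the property the researchers exploit at far larger scale.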

Even more astonishing, GANs now have the ability to generate whole videogames from scratch.

Recreating Pac-Man without coders

Trained on 50,000 episodes of Pac-Man, a new AI model created by Nvidia with the University of Toronto and MIT can generate a fully functional version of the classic arcade game without an underlying game engine. That means that even without understanding a game’s fundamental rules, AI can recreate the game with convincing results.

“We were blown away when we saw the results, in disbelief that AI could recreate the Pac-Man experience without a game engine,” said Koichiro Tsutsumi from Bandai Namco, which provided the data to train the GAN. “This research presents exciting possibilities to help game developers accelerate the creative process of developing new level layouts, characters and even games.”

Since the model can disentangle the background from the moving characters, it’s possible to recast the game to take place in an outdoor hedge maze, or swap out Pac-Man for your favourite emoji. Developers could use this capability to experiment with new character ideas or game themes.

“We could eventually have an AI that can learn to mimic the rules of driving, the laws of physics, just by watching videos and seeing agents take actions in an environment,” said Sanja Fidler, director of Nvidia’s research lab. “GameGAN is the first step toward that.”
