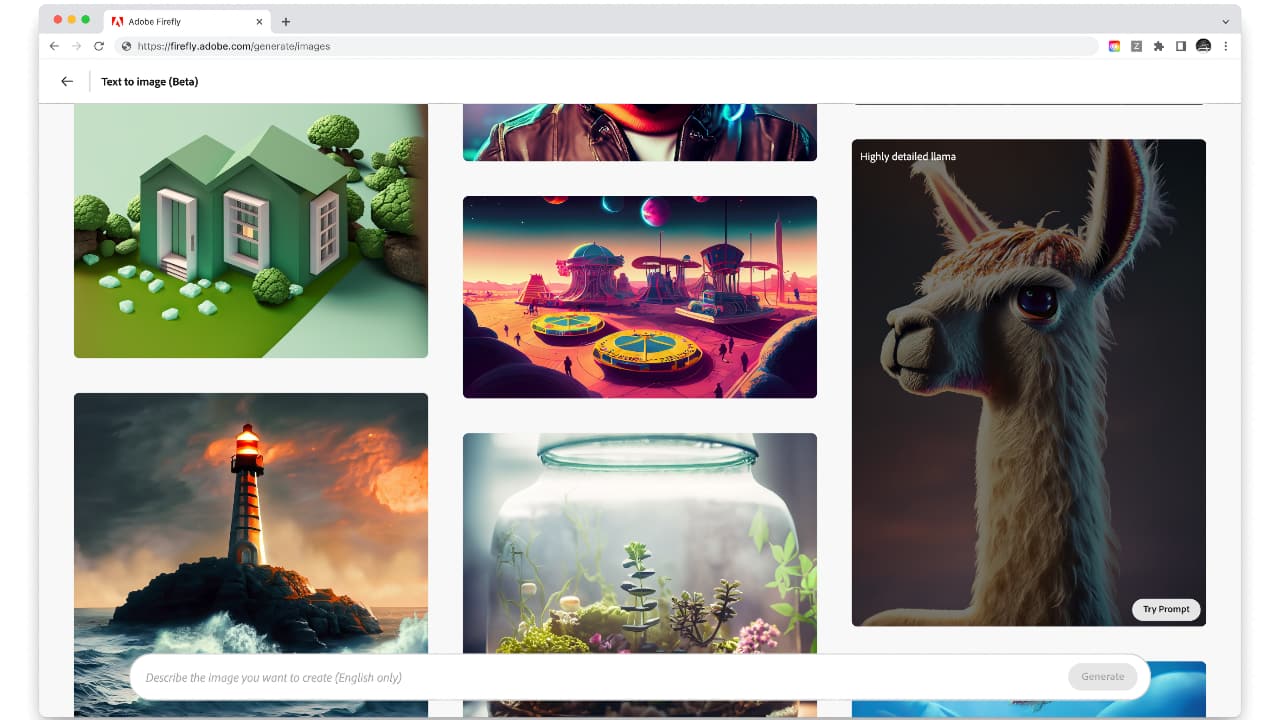

Adobe has been at the forefront of AI-driven image manipulation in its tools for years, so it’s no surprise to see it enter the generative AI field with the distinctively positioned new Firefly.

One of the key things about Adobe’s new Firefly family of generative AI models, announced today, is not so much what they do as how the company is positioning them. While acknowledging that the first release will be similar in capability to OpenAI’s tools, Adobe makes much of the fact that Firefly has been built in-house and trained entirely ethically, using images from Adobe Stock, openly licensed content, and public domain content whose copyright has expired. The company says this means its image outputs are 100% safe for commercial use. And given how much of AI currently sits in a legal grey area, any reassurance along those lines can only be a good thing.

Of course, Adobe has plenty of previous when it comes to AI: over a decade of it, in fact, much of it under the auspices of its Adobe Sensei program. Features such as Neural Filters in Photoshop, Content-Aware Fill in After Effects, Attribution AI in Adobe Experience Platform, and Liquid Mode in Acrobat have all made significant contributions to digital workflows over the past few years. And Adobe is now very much highlighting the collaborative powers of AI as it shifts its focus into the generative space.

“Adobe is leveraging generative AI to ease this burden with solutions for working faster, smarter and with greater convenience – including the ability for customers to train Firefly with their own collateral, generating content in their personal style or brand language,” it says. “Adobe’s intent is to build generative AI in a way that enables customers to monetize their talents, much like Adobe has done with Adobe Stock and Behance. Adobe is developing a compensation model for Adobe Stock contributors and will share details once Firefly is out of beta.”

Distinctive features

The beta is out now. Firefly will eventually be made up of multiple models, tailored to serve customers with a wide range of skill sets and technical backgrounds across a variety of use cases. This first model focuses on images and text effects and is designed specifically to generate content that is safe for commercial use. Adobe Stock’s hundreds of millions of professional-grade, licensed images should help ensure Firefly won’t generate content based on other people’s or brands’ IP. Future Firefly models, meanwhile, will draw on a variety of assets, technology and training data from Adobe and others. As those models are rolled out, Adobe promises it will continue to prioritise countering potentially harmful bias.

Adobe is also introducing a “Do Not Train” tag for creators who do not want their content used in model training. The tag will remain associated with the content wherever it is used, published or stored. Nor is the idea limited to the Adobe ecosystem: through the company’s work with the Content Authenticity Initiative, it is intended to become a universal tag that blocks such use everywhere. Similarly, another tag will flag content that has been generated by AI.

Another decent initiative is Adobe’s stated aim of building all this so that customers can monetize their talents, as the company has done with Adobe Stock and Behance. Details are still hazy, but Adobe confirms it is developing a compensation model for Adobe Stock contributors and will share details once Firefly is out of beta.

The training capability is interesting too. Extending Firefly training so that users can supply their own creative collateral as training inputs will give them a tool more in tune with their own style or brand language, and should help short-circuit what can still be a lengthy process of trial and error.

Perhaps above all, Firefly is set to be tightly integrated into Adobe’s toolsets across Creative Cloud, Document Cloud, Experience Cloud and Adobe Express workflows, which covers pretty much everything the company does. That gives it a huge leg up on competing systems.

There is no word yet on how Firefly will be charged for. The main possibilities are an additional subscription cost or the “x credits per generated image” model already used elsewhere. It’s also worth pointing out that this is just the start for Firefly-class applications. The Firefly website also discusses explorations in 3D modelling and text-based video editing, and the video below opens with a brief visualisation of a summer video scene being changed into one shot on a winter’s day.
