Ctrl+ Console

Use a touchscreen as an NLE controller? Actually, it works rather well

I was skeptical about the idea of using a tablet in place of a hardware controller. I've edited with dedicated hardware, and there's something very visceral about the one-to-one relationship between the real-world controls and the objects on your screen. Ideally (and this can happen), the whole system becomes invisible: editing becomes a matter of unconscious competence, and your ideas just flow onto the screen.

So, the question is: can this happen with an iPad? What's it like using a controller with no physical controls? I've been using the CTRL+Console app for iPad to find out. Without giving too much away at this stage, it works, and it works very well. So well, in fact, that I would consider using it every day.

The app comes with virtual control surfaces for Premiere and Final Cut Pro, in both basic and "pro" versions. When you go pro (it's a reasonable $29.95), you get a familiar-looking screen built around a jog-shuttle control, with all the main edit functions (Delete, Lift, Overwrite, Insert, Add Edit, Mark, In and Out) arranged concentrically around the periphery.

There are big buttons for Previous Edit and Next Edit, as well as a complete toolbar with the ripple, razor and other tools, plus buttons for switching between Source and Timeline.

It's easy to set up. Just follow the instructions and the iPad sets up a robust wireless connection with your computer.

I had big reservations about this, simply because touchscreens have no physical reference points to tell your fingers where to go. And if you have to keep looking at the screen, you're not editing efficiently.

But within a few minutes of trying it I was convinced, because I found that my fingers remembered where the controls were. It takes a little longer to learn all of them, but by feeling the edges of the screen (my iPad has a leather case that also holds it at a comfortable angle) I could always tell where I was.

The controls "feel" good

What's more, the "feel" of the controls is very good. You might expect them to have no feel at all, but in fact it all seems very natural. The tight coupling between the app and the NLE encourages you to treat CTRL+Console's on-screen controls as if they were physical ones.

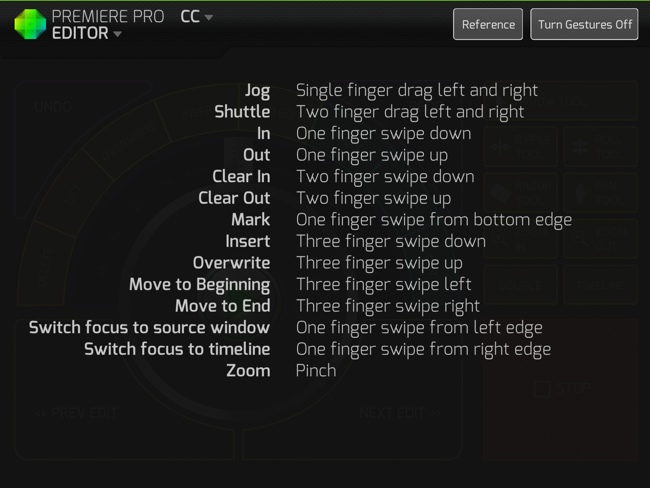

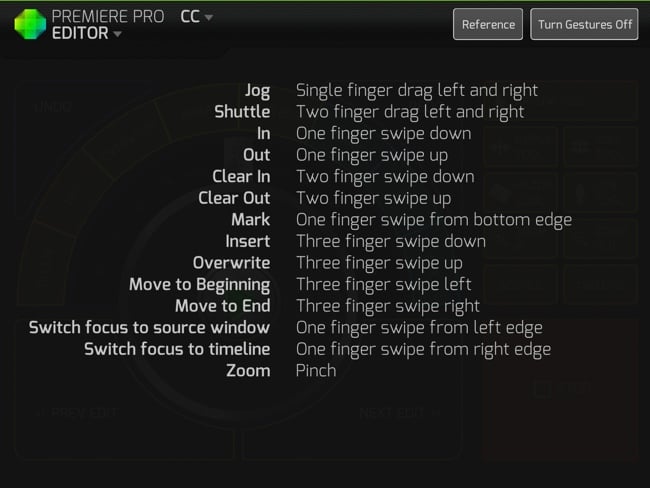

Beyond the "drawn" controls on the screen, there's a comprehensive set of gestures, which speeds things up even more.

One thing that dawned on me while using it is that this is a very good way to "even out" the differences between NLEs. Because the same control surface drives both Premiere and Final Cut Pro, it acts like an abstraction layer above each NLE's own UI. I think this is probably a very good thing.

Personally, I'd recommend using the iPad Mini, because it is just the right size for single-handed operation, and it doesn't take up too much desk space.

I think this is a great use of technology, and definitely something I'd recommend you try.

CTRL+Console is available from the Apple App Store.

The app is $29.95, but you can also buy a simplified control interface for $5. This lets co-workers on a project play back material without any danger of changing the edit.
