We could have put a big lens flare on this, which would in many ways have been quite appropriate, but you'll just have to take our word that it's important.

The ITU has in the end not just recommended one HDR standard, but two. Phil Rhodes on the competing standards that offer contrasting benefits: which one will come out on top?

Betamax was better than VHS. With that ancient truism out of the way, we can get to something that's the subject of the moment in more ways than one: HDR, which seems doomed to a rather similar format war. We can only hope that the best technology wins, or at least that we all have a reasonably similar definition of 'best'. To this end, the Radiocommunication Sector of the International Telecommunication Union (called ITU-R for reasons of brevity) has announced its Recommendation BT.2100.

Brought to you by the same people who decided that television greens would in fact be slightly feeble and yellowish, Rec. 2100 is described in the desiccated terminology of international standardisation bodies as "image parameter values for high dynamic range television for use in production and international programme exchange." What it actually does is to codify and standardise two approaches to HDR which are already pretty well known: Dolby's perceptual quantization, and hybrid log-gamma, mainly championed by the BBC and NHK.

HDR tech talk

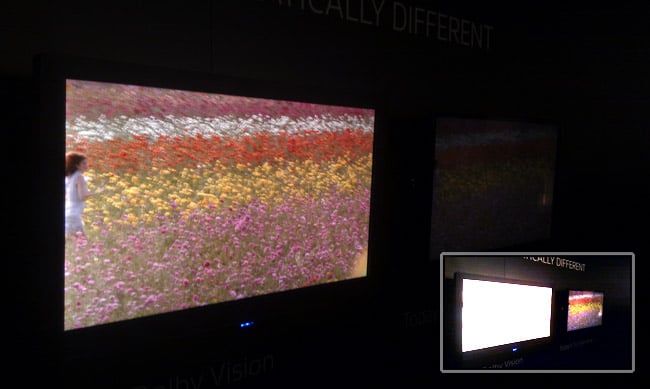

This shot of the Dolby booth at IBC is still probably the best way to demo HDR on normal displays.

Inset: what the comparison looks like exposed for the SDR display (right).

Before we dive into a discussion of the specifics of these two things, it's worth defining exactly what their purpose is. Both of them are – deep breath – electro-optical transfer functions, which is a technical way of saying that they define the relationship between an electronic signal level and the amount of light that comes out of a display. Bigger signals provoke brighter light, generally, but the purpose of an EOTF is to define exactly how that works. Strangely, this stuff was never all that well defined in the past. We simply relied on the way that a cathode-ray tube responded to rising voltage inputs with rising brightness, which through blind luck happened to be quite suitable for the way analogue television operated. This had been the reality since the 1930s and, astonishingly, it wasn't standardised until 2011, as ITU-R Recommendation BT.1886. Basically, we're making LCD and OLED displays and electronically modifying the signals sent to them so that they behave like the CRTs of the last nine decades.
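To make the idea of an EOTF concrete, here is a minimal Python sketch of the BT.1886 reference curve, L = a * max(V + b, 0)^2.4, where V is the normalised signal and a and b are derived from the display's white and black luminance. The function name and its default 100-nit white and zero black levels are illustrative assumptions, not values the standard mandates for every display.

```python
def bt1886_eotf(v: float, white_nits: float = 100.0, black_nits: float = 0.0) -> float:
    """Map a normalised signal V (0.0 to 1.0) to display luminance in nits
    using the BT.1886 reference EOTF, L = a * max(V + b, 0) ** 2.4.
    Hypothetical helper: defaults assume a 100-nit white and perfect black."""
    gamma = 2.4
    # Work in the gamma-compressed domain of the display's white and black levels.
    lw = white_nits ** (1.0 / gamma)
    lb = black_nits ** (1.0 / gamma)
    a = (lw - lb) ** gamma  # scales the curve so that V = 1.0 reaches peak white
    b = lb / (lw - lb)      # lifts the curve so that V = 0.0 sits at the black level
    return a * max(v + b, 0.0) ** gamma


if __name__ == "__main__":
    for v in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"signal {v:.2f} -> {bt1886_eotf(v):6.2f} nits")
```

With the default 100-nit white, a half-level signal comes out at roughly 19 nits, which shows how strongly this CRT-derived curve favours the darker part of the signal range.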

Both perceptual quantisation (or PQ, as we'll call it) and hybrid log-gamma (HLG, for short) approach this in a way that suits high dynamic range material, defining a range of signal levels that result in much higher maximum brightness and contrast than conventional approaches. Common Rec. 709 monitors interpret the maximum signal level as a display brightness of somewhere around 100 nits, or 100 candelas per square metre. (Strictly, Rec. 709 doesn't specify a relationship between signal and brightness at all; that job falls to Rec. 1886.) Most HDR standards accommodate a brightness range of up to thousands of nits.

The reason we need a different approach for HDR displays is simply that the steps between brightness levels are larger on a higher-contrast display, and that current approaches to distributing digital values across the brightness range aren't designed to accommodate very high brightness. With a (hypothetical) 8-bit display, we can encode 256 brightness levels. If the maximum brightness of the display is 256 nits, we might encode roughly one nit per digital value. If we double the maximum brightness to 512 nits, adjacent code values are now about two nits apart, which might be a visible step that would prevent us drawing smooth gradients.
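The arithmetic in that example can be sketched in a few lines of Python. This assumes, as the hypothetical does, that code values are spread evenly (linearly) from zero to peak brightness; strictly there are 255 gaps between 256 levels, so "one nit per value" is a rounded figure. The function name is our own.

```python
def linear_step_nits(peak_nits: float, bits: int) -> float:
    """Brightness gap between adjacent code values when codes are spread
    evenly (linearly) from 0 to peak_nits. Hypothetical illustration only."""
    levels = 2 ** bits               # e.g. 256 levels for 8 bits
    return peak_nits / (levels - 1)  # 255 gaps between 256 levels


if __name__ == "__main__":
    for peak in (256, 512, 1000, 10000):
        print(f"{peak:>5} nits, 8-bit: {linear_step_nits(peak, 8):6.2f} nits/step; "
              f"10-bit: {linear_step_nits(peak, 10):6.2f} nits/step")
```

Doubling the peak doubles every step, and adding two bits divides the step by roughly four, which is the "just use more bits" option the next paragraph mentions.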

The easy approach would be simply to use more bits, but naturally we'd prefer to minimise the need for that by assigning numbers to brightness in a way that the brightness steps between numbers are less visible. Then, we can use a smaller range of numbers and store less data. Existing approaches already do that to some extent, but are largely designed for conventional dynamic range pictures.
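As a rough illustration of "assigning numbers to brightness" more cleverly, the sketch below compares a linear encoding with a simple 2.4 power-law (gamma) encoding on an assumed 8-bit, 100-nit display. It measures each step as a fraction of the current brightness, a crude stand-in for visibility on the simplifying assumption that the eye responds roughly to ratios; real perceptual models, including the one behind PQ, are more involved. All names here are hypothetical.

```python
GAMMA = 2.4  # assumed BT.1886-style display gamma, for illustration


def linear_decode(code: int, bits: int, peak: float) -> float:
    """Code value -> nits, with codes spread evenly over the range."""
    return peak * code / (2 ** bits - 1)


def gamma_decode(code: int, bits: int, peak: float) -> float:
    """Code value -> nits, with a simple power-law (gamma) curve."""
    return peak * (code / (2 ** bits - 1)) ** GAMMA


def relative_step_at(target_nits: float, decode, bits: int = 8, peak: float = 100.0) -> float:
    """Find the first code whose luminance reaches target_nits and return the
    size of the next step as a fraction of that luminance."""
    top = 2 ** bits - 1
    for code in range(1, top):
        lum = decode(code, bits, peak)
        if lum >= target_nits:
            return (decode(code + 1, bits, peak) - lum) / lum
    raise ValueError("target is brighter than the top code value")


if __name__ == "__main__":
    for nits in (1.0, 10.0, 80.0):
        lin = relative_step_at(nits, linear_decode)
        gam = relative_step_at(nits, gamma_decode)
        print(f"~{nits:>4} nits: linear step {lin:.1%}, gamma step {gam:.1%}")
```

Near 1 nit the linear encoding jumps by about a third of the current brightness, while the gamma curve steps by roughly 6%; near 80 nits the trade reverses slightly. That redistribution is exactly what conventional encodings do, and why they run out of precision when the range is stretched to thousands of nits.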

HDR vs HDR

Production equipment for HDR, such as Atomos's Flame recorder, is already available.

As stated at the head of this article, the new Recommendation BT.2100 concentrates on two popular approaches: Dolby's perceptual quantisation, and the hybrid log-gamma encoding developed by a BBC-NHK collaboration.

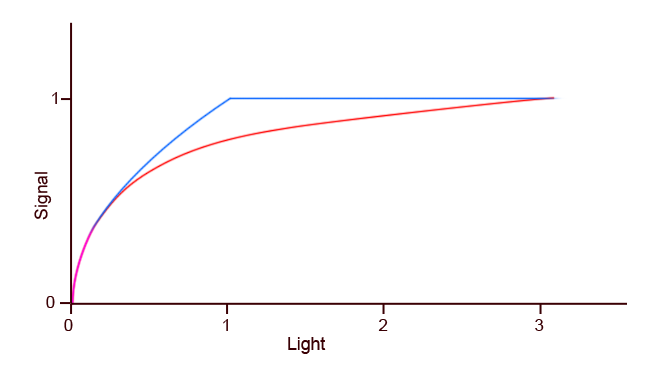

PQ was previously standardised by SMPTE as ST 2084, and is the basis for the well-known Dolby Vision system for displaying HDR on devices of varying capability. PQ itself is based on careful calculations and experiments designed to match the numerical precision with which brightness is stored to the way the human visual system works. Experiments with extremely bright images led the designers of PQ to create a system capable of driving 10,000-nit displays with precision that closely follows the behaviour of human vision. In particular, the design avoids spending a lot of digital code values on that very high brightness range, while retaining it for the sake of future capability. Because no 10,000-nit monitors exist, a PQ signal generally has to be processed to suit the display, which requires knowledge of the display's capability. So although ST 2084 defines an absolute mapping from code values to light, what a real display produces depends on that processing, which is why there's no PQ curve on the accompanying graph: in practice, it isn't a simple code-values-to-light relationship.
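For the curious, the core PQ curve from SMPTE ST 2084 can be sketched in Python. The constants below are the published ST 2084 values; the function names, and the choice to count full-range 10-bit codes, are our own illustrative assumptions. Deliberately absent is the display-specific tone mapping that a real sub-10,000-nit monitor would apply, which is the part that varies between products.

```python
# SMPTE ST 2084 (PQ) constants, written as the rational values in the standard.
M1 = 2610 / 16384       # 0.1593017578125
M2 = 2523 / 4096 * 128  # 78.84375
C1 = 3424 / 4096        # 0.8359375 (equals C3 - C2 + 1)
C2 = 2413 / 4096 * 32   # 18.8515625
C3 = 2392 / 4096 * 32   # 18.6875
PEAK = 10000.0          # PQ is absolute: full signal means 10,000 nits


def pq_eotf(signal: float) -> float:
    """Non-linear PQ signal (0.0 to 1.0) -> absolute luminance in nits."""
    p = signal ** (1.0 / M2)
    y = max(p - C1, 0.0) / (C2 - C3 * p)
    return PEAK * y ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> non-linear PQ signal (0.0 to 1.0)."""
    y = (nits / PEAK) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


if __name__ == "__main__":
    # Hypothetical full-range 10-bit quantisation: code / 1023.
    lums = [pq_eotf(code / 1023) for code in range(1024)]
    below_100 = sum(1 for lum in lums if lum < 100.0)
    above_1000 = sum(1 for lum in lums if lum > 1000.0)
    print(f"100 nits -> PQ signal {pq_inverse_eotf(100.0):.3f}")
    print(f"codes below 100 nits: {below_100}, codes above 1000 nits: {above_1000}")
```

Run as written, roughly half of the 1,024 codes land below 100 nits and only about a quarter cover everything from 1,000 to 10,000 nits, which is the "don't waste bits on the highlights" design described above.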

Hybrid log-gamma is in some ways similar, but compromises somewhat on absolute performance to achieve some technical conveniences; critically, it can reasonably be displayed on either high- or standard-dynamic-range displays. This is achieved by using a logarithmic encoding above 50% signal value (which corresponds to only about one twelfth of peak scene light), and a more conventional, gamma-style square-root encoding, akin to Rec. 709, below that point.
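The two-part HLG curve is short enough to sketch directly. This Python version follows the HLG OETF constants published in ITU-R BT.2100 (originally ARIB STD-B67), mapping normalised scene light E to signal E'; the function name is our own, and the opto-optical system gamma a display applies afterwards is left out for simplicity.

```python
import math

# HLG OETF constants as published in ITU-R BT.2100.
A = 0.17883277
B = 1.0 - 4.0 * A                # 0.28466892
C = 0.5 - A * math.log(4.0 * A)  # 0.55991073


def hlg_oetf(e: float) -> float:
    """Normalised scene light E (0.0 to 1.0) -> non-linear HLG signal E'."""
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)          # square-root (gamma-like) lower segment
    return A * math.log(12.0 * e - B) + C  # logarithmic upper segment


if __name__ == "__main__":
    for e in (0.0, 0.01, 1.0 / 12.0, 0.25, 0.5, 1.0):
        print(f"scene light {e:.4f} -> signal {hlg_oetf(e):.4f}")
```

In this sketch the 50% signal point falls exactly at E = 1/12, where the two segments meet, and everything brighter is squeezed logarithmically into the top half of the signal range.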

HLG (red) versus standard dynamic range (blue).

PQ isn't shown because it doesn't have a simple signal-to-light relationship.

The upside is that this looks reasonable on both HDR and SDR displays. The criticism, if you want one, is that it's a compromise too far, which works on both but looks great on neither and, overall, sacrifices too much on the altar of technical convenience. The point is, though, that things which are easier to use are more likely to actually be used, and ARIB, a Japanese standards body, has already decided that it's the right way to go. It is perhaps no surprise that HLG is the daughter of two broadcasters which both have significant R&D departments and a direct reason to control the costs of distribution.

We have, of course, glossed over Dolby's Vision standard, which is based on PQ. It is very capable, and Dolby has given impressive demonstrations, although those demos have often not really represented the reality that might exist at the consumer end of the chain. It is also complicated and requires licensing from Dolby. This is, after all, the way in which the company has historically made a living, by licensing its cinema exhibition standards. The attraction for Dolby is in collecting a presumably small fee for every Vision-compliant TV sold. The risk is that there are now two reasons the approach may seem expensive: it's complicated, and it's proprietary, while HLG in particular has been promoted as an open standard, which matters a great deal.

Dolby's Vision standard, promoted to the public here in LA in early 2016, is capable, with 12-bit pictures and variable luminance mapping, but complex.

So, as well as the technical challenges of working with HDR and the currently swingeing cost of high-end HDR monitoring, we may have another format war on our hands. It's not as easy to plump for an ideal as it was with Betamax and VHS. Vision is great, but complicated. HLG is easy, but not nearly as capable. For a while, then, we're likely to have to keep chasing all the rabbits at once, at least until the consumer world has decided what it wants. Camera people can probably just be happy that shooting techniques are, with luck, unlikely to change much.
