Media processing in the browser

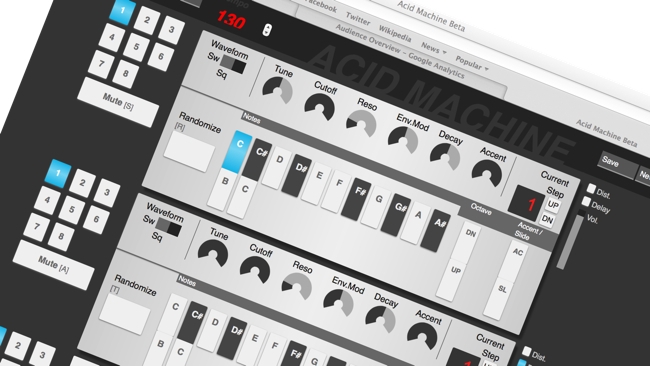

Here's a taste of what's to come: real-time processing in a web browser

Perhaps the most important trend in computing and content creation is virtualization. It's a dry-sounding subject that feels like it should be the domain of IT departments and not necessarily filmmakers and musicians. But what's happening now is absolutely transformative.

Remember the Mac vs PC wars? Well, actually they're still going on. But meanwhile, something far more important is happening: eventually, everything will be completely platform-independent and will, effectively, run in a browser.

We're going to have to stop thinking about browsers the way we have in the past. Anyone who was around in the early days of the web will remember that "browser" was a synonym for "clunky and slow". But today, computers are orders of magnitude faster and browser-based programming has reached heights that were unimaginable just a few years ago. Browsers are now effectively computers in their own right. You can write applications specifically for browsers, and because browsers run on multiple platforms, anything you write for a browser will run on those platforms as well. (By "platform" I mean an iOS phone, an Android phone, a Mac, a Windows PC, Linux (on anything), a games console and so on.)

There are definitely disadvantages to virtualization. It's slower than running applications natively, for example. It has to be, because whatever hardware hosts the virtual machine must spend part of its effort supporting the virtual machine itself, which takes plenty of processing cycles. A virtual machine "pretends" to be a real machine as far as the software is concerned, but the point is that it can pretend to be the same type of machine, whatever type of hardware it is actually running on.

So if, for example, an application says it can run in Chrome, it doesn't need to know what type of machine Chrome is running on (within reason: it would probably baulk at a food mixer).

Virtualization can't perform miracles: ultimately, the capabilities of a virtual machine can't exceed, or even closely approach, those of the host hardware. But with the rapid increase in processing power (including multiple cores), we have, in certain areas, passed the point where it becomes possible to run demanding real-time applications in a virtual machine.

At the lower end, you can find software like this, which is a basic but fully functional synthesizer and drum machine written in JavaScript, a language which, I suspect, was never intended to facilitate real-time synthesis. Java, which sounds similar to JavaScript but is actually fundamentally different, runs in its own virtual machine, which is supported across a very wide range of platforms. Here's a state-of-the-art example of a cloud-based production system written in Java.
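At its core, real-time synthesis in JavaScript comes down to computing audio samples one at a time, fast enough to keep up with playback. The sketch below is a minimal illustration in TypeScript (JavaScript with type annotations), assuming floating-point samples in the range -1 to 1 and a 48 kHz sample rate. The function names `sineBuffer` and `applyGain` are hypothetical and are not taken from the synthesizer mentioned above; a real browser synth would typically pass such samples to the browser's Web Audio API rather than simply return them.

```typescript
// Hypothetical helper: fill a buffer with a sine wave, one sample at a time.
// This per-sample loop is the kind of work a browser-based synth does constantly.
function sineBuffer(freqHz: number, sampleRate: number, length: number): Float32Array {
  const out = new Float32Array(length);
  for (let n = 0; n < length; n++) {
    // Phase advances by 2 * PI * freq / sampleRate for every sample.
    out[n] = Math.sin((2 * Math.PI * freqHz * n) / sampleRate);
  }
  return out;
}

// Hypothetical helper: a simple gain (volume) stage, applied sample by sample.
function applyGain(input: Float32Array, gain: number): Float32Array {
  return input.map((sample) => sample * gain);
}

// Usage: 10 ms (480 samples at an assumed 48 kHz) of a 440 Hz tone at half amplitude.
const tenMsOfA440 = applyGain(sineBuffer(440, 48000, 480), 0.5);
```

Even this trivial example has to produce 48,000 values per second per channel, which gives a sense of why real-time synthesis in a virtualized environment like a browser only became practical once processors got fast enough.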

At the other end of the scale, Dell is now offering a fully fledged virtualized workstation, complete with GPU acceleration. This is really a way of allocating the resources of real workstations to remote users: the applications run on those workstations, and users see them on their local systems, which don't have to be powerful at all.

In between, there are virtualized electronic music and recording studios such as Reason. Reason runs on PCs and Macs but is heavily customized to work efficiently on both platforms. This happens at a low level in the application itself, but the higher-level elements (we don't know exactly which) are clearly portable to some extent, because Thor, the heavy-hitting synth included in Reason, runs on an iPad as well.

Processing is becoming commoditized

Processing power is becoming completely commoditized. The landscape is shifting from expensive, power-hungry, dedicated computers to distributed access to low-cost, low-power processors that are available everywhere at any time. The Internet Of Things will only push this further, and the speed at which this happens depends, I suppose, on how you define "things".

The only way to run powerful programs on these distributed platforms is to virtualize them. Soon, it won't matter that we waste processing power in the virtualization process: that will be more than compensated for by the almost inevitable increases in processing power and by falling costs. We used to worry about writing DSP code (Digital Signal Processing: essentially software that processes "signals" like audio and video) in C++ instead of machine code, because the result ran inefficiently. But it is much more efficient for the programmer to write code this way. You can get more done, and programs, although perhaps an order of magnitude slower to run, are much nicer to use because there's more time to pay attention to the UI and usability in general. This might have cost us a generation or two of processor speed increases, but that happened long enough ago for us not to mind now.

It will be the same thing with virtualization. At some point, all our programs - even our media processing ones - will run on virtual platforms, and we will be better off because of it.

For specialist equipment manufacturers, it will be nirvana, because they can re-use the same code across all their products, even when they update them radically.

Ultimately, our applications will follow us around. They'll be in our local hardware, spread across multiple distributed processors, or processed in the cloud. We won't even think about this. It will be seamless. Need to change the look of your film, in-camera? Don't worry. Just request it and somewhere, out there, will be a process that can do it for you. Don't worry about bandwidth or processing power: you'll just be able to "assume" it.
