Consumer-grade GPUs are getting faster and, crucially, they're using less power

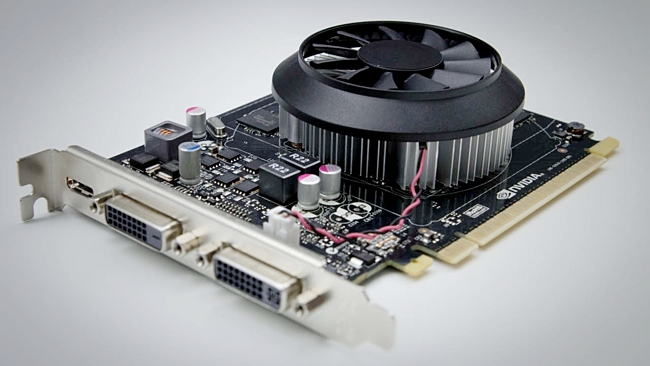

GameSpot has revealed that Nvidia's GTX 750 Ti, which costs around $149, outperforms the Xbox One in some games. Of course, you still need to buy a computer to use it with, but the point is that things look a bit dicey for a games console designed to last seven to ten years if a mid- to low-range (in terms of cost) GPU can outperform it within months of its release.

But there's more to this story than the questionable longevity of the latest generation of games consoles.

It's impressive that such low-cost cards can perform so well (but see our article on why professional cards are better for professionals). What also emerges here is that performance is starting to be quoted not just as "how fast it does this" but as "how fast it does this, per watt". In other words, power consumption is becoming important.

Mobility

And that's because we're getting more and more mobile.

There are other reasons as well, including the very good argument that it's worth using less power even with a static desktop computer. But everyone wants mobility, even power users. The awesome new Dell Precision M3800 (which we're currently reviewing) is a tour de force. Although it doesn't exactly sip power when running flat out, it's a great demonstration of the viability of a mobile workstation, and better performance per watt can only help machines like it.

Colour correction

Beyond that, GPUs are taking on an increasing number of tasks in film and TV production, not just in post-production. You can see them in use on set, processing raw footage so that the director and DoP can preview how the shot will look once it has been colour corrected. In future, virtually every step in the workflow will benefit from real-time colour correction. Much of the time this will be carried out by FPGAs (hardware chips that can be reprogrammed to perform a range of tasks; they're effectively a way of running software at hardware speeds), but FPGAs can't match the sheer flexibility, and ultimately the speed, of dedicated GPUs. (I'm sure that last statement is debatable, but FPGAs do not have flexible high-level architectures like Nvidia's Kepler.)