RedShark Timewarp: This was one of the earliest articles published on RedShark, back in 2012, but it still has legs as technologists wrangle with the idea of volumetric video.

If you’re getting bored with the daily diet of 4K, 48FPS and stereoscopic announcements (and I hasten to add that I’m not!), then here’s something that might raise at least one eyebrow by a degree or two.

It’s Volumetric Video.

Cubic Pixels

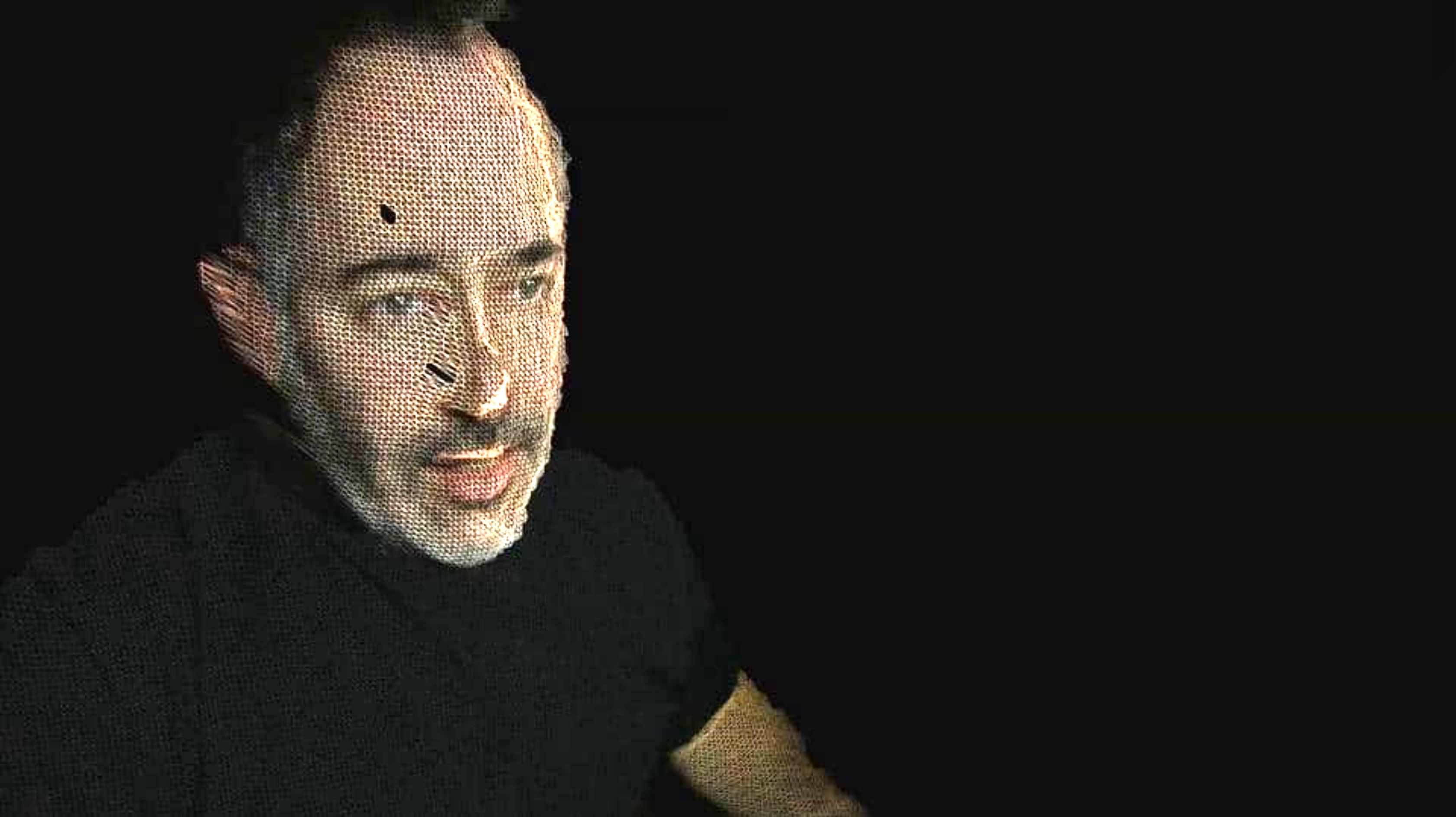

Some researchers have taken a Microsoft Kinect (the thing you plug into your Xbox 360 that allows you to dispense with the controller in video games because you replace it with your own body) and upgraded the resolution of its built-in camera with a pair of DSLRs, with some rather hallucinogenic results.

The Kinect tracks your body’s position in three dimensions, and it does this through a multi-stage process, part of which is capturing your posture complete with depth information. Unlike traditional cameras that record an image in two dimensions (x and y), the Kinect records a third dimension, depth, normally called z.

So every pixel captured by the Kinect has a horizontal, vertical and depth position. It exists within a volume, and not just on a plane.
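As a rough illustration of what “existing within a volume” means, here is a minimal sketch that turns a depth pixel into a point in 3D space using a simple pinhole camera model. The function name, the `Point3D` type and the focal length and image-centre values are all assumptions made up for this example; they are not the Kinect’s real calibration figures or anything from its SDK.

```python
from typing import NamedTuple


class Point3D(NamedTuple):
    """A point in camera space, in metres (hypothetical helper type)."""
    x: float
    y: float
    z: float


def pixel_to_point(u, v, depth_m, fx=580.0, fy=580.0, cx=320.0, cy=240.0):
    """Back-project image pixel (u, v) with a measured depth into 3D.

    fx, fy (focal lengths in pixels) and cx, cy (image centre) are
    illustrative placeholder values, not real calibration data.
    """
    x = (u - cx) * depth_m / fx   # horizontal offset from the optical axis
    y = (v - cy) * depth_m / fy   # vertical offset from the optical axis
    return Point3D(x, y, depth_m)  # depth becomes the z coordinate


# A pixel at the image centre, two metres away, sits on the optical axis.
centre = pixel_to_point(320, 240, 2.0)
print(centre)
```

Run this over every pixel in a depth frame and you get a cloud of points rather than a flat picture, which is roughly the raw material the rest of this article is talking about.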

This is extremely important if you are going to use your body as a games controller, because it allows Kinect-savvy games to understand your position in three dimensions - but in a different way to stereography. The Kinect creates an actual 3D model of your body in real time. Once it’s captured, you can “fly” around it as if it were a moving statue. (Of course, Kinect can’t see round the back, so the model may have a 3D “shadow” where there are no details at all.)

Getting behind the subject

Examples of this have been on the web for some time, but the results, while fascinating, have been decidedly lo-fi because of the Kinect’s low-resolution imaging. Now, with the RGBD Toolkit, developed by the Studio for Creative Inquiry (part of Carnegie Mellon University), you can capture volumetric video in much higher resolution.

As you can see, there’s still a long way to go. But this is something of seismic importance to the Film and TV industry.

Because when this technique is refined (and there’s almost no limit to how refined it can get when you remember this is based on consumer games technology and not industrial-grade studio equipment) we will be able to film any scene, and then change the position of the camera in post-production.

Camera position will become just another keyframeable attribute that you can change in the edit. Even the volumetric “shadow” behind the objects in the frame needn’t be a problem: just put another camera round the back and coordinate it with the ones at the front.
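To make the idea of a keyframeable camera a little more concrete, here is a minimal sketch, assuming the scene has already been captured as 3D points. It moves a virtual pinhole camera between two keyframed positions and works out where a captured point would land in the new image. The function names, the yaw-only rotation and the focal length are all simplifying assumptions for illustration, not how the RGBD Toolkit or any editing package actually does it.

```python
import math


def lerp(a, b, s):
    """Linearly interpolate between two 3D positions; s runs from 0 to 1."""
    return tuple(pa + (pb - pa) * s for pa, pb in zip(a, b))


def project(point, cam_pos, yaw_deg=0.0, focal=580.0, cx=320.0, cy=240.0):
    """Project a world-space point into a virtual camera's image.

    The camera sits at cam_pos and looks along +Z; a positive yaw turns
    it toward +X. focal, cx and cy are placeholder values in pixels.
    Returns None when the point is behind the camera.
    """
    # Express the point relative to the camera position.
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    # Rotate into the camera's own axes (rotation about the vertical axis).
    a = math.radians(yaw_deg)
    xc = math.cos(a) * dx - math.sin(a) * dz
    zc = math.sin(a) * dx + math.cos(a) * dz
    if zc <= 0:
        return None
    # Standard pinhole projection onto the image plane.
    return (cx + focal * xc / zc, cy + focal * dy / zc)


# Two camera keyframes: slide one metre to the right over three frames.
subject = (0.0, 0.0, 2.0)
start, end = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
for frame in range(3):
    cam = lerp(start, end, frame / 2)
    print(frame, project(subject, cam))
```

As the virtual camera slides right, the subject drifts left in the frame, exactly as it would with a physical dolly move, except that here the move is chosen after the shoot.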

Flight of fantasy

Of course, once we exist as real-time high-resolution models, we can dispense with conventional motion capture and apply CGI “textures” to our bodies. We’ll be able to change our clothes, grow hair, and even look like long-dead actors or the native inhabitants of Pandora.

And why limit ourselves to three dimensions? If you embed a time coordinate in each voxel (which would become, I suppose, a “Tixel”), then there’s no need to even think about the order in which events happen, because the tixels themselves would just appear, in the right time, and at the right place.
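Purely as a thought experiment, a “tixel” could be sketched as a point that carries its own timestamp. In the minimal example below, the `Tixel` class, the `frame_at` function and the 30 frames-per-second default are all invented for illustration; nothing like this exists in the Kinect or the RGBD Toolkit as far as this article knows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tixel:
    """A hypothetical voxel with a time coordinate (seconds)."""
    x: float
    y: float
    z: float
    t: float


def frame_at(tixels, time_s, frame_duration=1 / 30):
    """Pick out the tixels that belong to the frame starting at time_s.

    The storage order of the input does not matter, because each tixel
    knows when it should appear.
    """
    return [p for p in tixels if time_s <= p.t < time_s + frame_duration]


# Deliberately stored out of time order.
cloud = [
    Tixel(0.0, 0.0, 1.0, t=0.50),
    Tixel(1.0, 0.0, 1.0, t=0.00),
    Tixel(2.0, 0.0, 1.0, t=0.51),
]
print(frame_at(cloud, 0.5))
```

A real system would need some kind of index to avoid scanning every tixel for every frame, but the principle is the same: the time lives in the data, not in the order it was recorded.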

Update

To bring this article up to date and to see where we are with volumetric video right now, watch the TED talk below.
