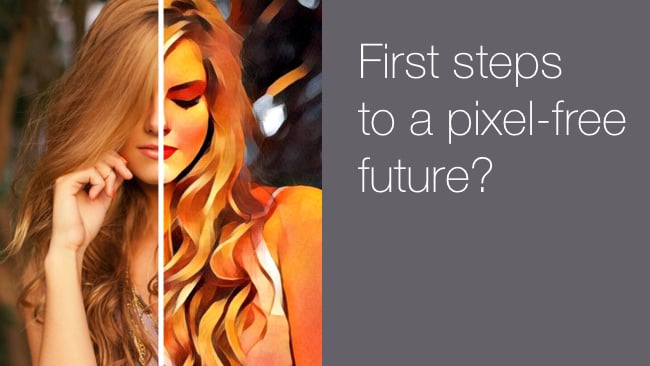

First steps to a pixel-free future?

It might not be getting quite as much attention as Pokémon Go - what is? - but a new app that transforms photos into 'art' in the style of the masters is overloading servers worldwide and could point to the future of vector video.

Unless you've been able to take time out from chasing Pokémon around Central Park, you might not have noticed that another app, Prisma, is breaking its publisher's servers by being unbelievably popular. And the good news is that it's popular because it's good.

Artistic stroke

Prisma will transform photos that you take on your phone into remarkably credible pieces of 'art' in abstract styles or those of well-known artists. It works incredibly well and people love posting pictures of their puppy in the style of Piero della Francesca or their local KFC fashioned after Kandinsky.

How does it work? We don't know. It's pretty clear that there's some intensive work going on inside the Prisma app. It can take 15 seconds on a reasonably fast phone to apply an artistic style.

And yet, there's talk of a video version. That would be awesome and quite possibly transform video forever. Never mind a colour look; what about an entire film in the style of Manet? Or even Picasso? (If you did that, you'd never notice the macroblock errors.)

Video challenges

I'm serious about this. But it wouldn't be easy. For a start, you'd need to speed things up. If it takes 15 seconds to process a frame, that's 375 times slower than real time at 25 frames per second. This might not be a problem because a smartphone isn't exactly the fastest computing platform, although with modern GPUs, they're really not bad.
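The 375 figure only works out if you assume 25 frames per second (15 seconds per frame multiplied by 25 frames for every second of footage). Here's that back-of-envelope sum as a small Python sketch. The function names are made up for illustration, and the 15-second figure is just the rough timing mentioned above, not a measured benchmark.

```python
def slowdown_factor(seconds_per_frame: float, frames_per_second: float) -> float:
    """How many times slower than real time the processing runs."""
    # One second of video contains `frames_per_second` frames,
    # and each of them takes `seconds_per_frame` to process.
    return seconds_per_frame * frames_per_second


def render_hours(clip_seconds: float, seconds_per_frame: float,
                 frames_per_second: float) -> float:
    """Offline render time, in hours, for a clip of the given length."""
    total_frames = clip_seconds * frames_per_second
    return total_frames * seconds_per_frame / 3600


if __name__ == "__main__":
    # Assumed values: 15 s per frame, 25 fps.
    print(slowdown_factor(15, 25))   # 375
    print(render_hours(60, 15, 25))  # 6.25 (hours for one minute of video)
```

So at that speed, a single minute of footage would tie up the phone for over six hours.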

I'm guessing, but to do this in real-time, you would probably need a custom chip design. Or racks of servers. But if you don't need real-time, then you can render, just like normal.

There are other questions. The process clearly works with still images and the results are astonishingly good. But what happens when they start moving? You'd need some sort of intelligence in the software to recognise that a stylised shadow on a face, which looks like a dark black line, is not actually a permanent feature but a passing lighting artefact.

Ultimately, stylisation requires at least some knowledge of the objects it is being applied to. But object recognition is getting better all the time, so this might not be a big issue, although you can imagine it's always going to need some 'cleaning up' where inaccurate guesses have been made.

Future vectors

Now, once a picture has been stylised, it has also been quantified. In some ways, it's a bit like turning a bitmap image into an Adobe Illustrator file. Once you have all the information you need about the outlines and fills of the objects making up an image, it's possible to describe them mathematically. This type of picture is called a 'vector' image. A vector video would not only have descriptions of what's in a frame, but of what happens across a sequence of frames too.
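To make the idea a bit more concrete, here's a toy Python sketch of a vector description. It is purely illustrative and has nothing to do with how Prisma works internally (which we don't know). The `Circle` class and the `rasterise` and `interpolate` functions are hypothetical names invented for this example. A shape is stored as an outline and a fill rather than as pixels, so it can be drawn at any resolution, and an in-between frame can be calculated from two keyframes rather than stored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    """A shape stored as maths, not pixels (coordinates are 0..1 fractions of the frame)."""
    cx: float   # centre x
    cy: float   # centre y
    r: float    # radius
    fill: str   # a single character standing in for a fill colour


def rasterise(shape: Circle, width: int, height: int) -> list[str]:
    """Turn the description into a grid of 'pixels' at whatever size is asked for."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Sample the centre of each pixel in normalised coordinates.
            px = (x + 0.5) / width
            py = (y + 0.5) / height
            inside = (px - shape.cx) ** 2 + (py - shape.cy) ** 2 <= shape.r ** 2
            row += shape.fill if inside else "."
        rows.append(row)
    return rows


def interpolate(a: Circle, b: Circle, t: float) -> Circle:
    """Describe the frame at time t (0..1) between two keyframes by linear blending."""
    def lerp(p: float, q: float) -> float:
        return p + (q - p) * t
    return Circle(lerp(a.cx, b.cx), lerp(a.cy, b.cy), lerp(a.r, b.r), a.fill)


if __name__ == "__main__":
    start = Circle(0.25, 0.5, 0.2, "#")
    end = Circle(0.75, 0.5, 0.2, "#")
    halfway = interpolate(start, end, 0.5)
    # The same description renders at any resolution.
    print("\n".join(rasterise(halfway, 16, 16)))
    print("\n".join(rasterise(halfway, 32, 32)))
```

The hard part, of course, is getting from real footage to descriptions like this in the first place. The sketch only shows what you can do once you have them.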

You can read all about vector video in our article here.

The point here is that, even though we're still a very long way away from it, the technology in Prisma could bring us a little bit closer to true vector video.
