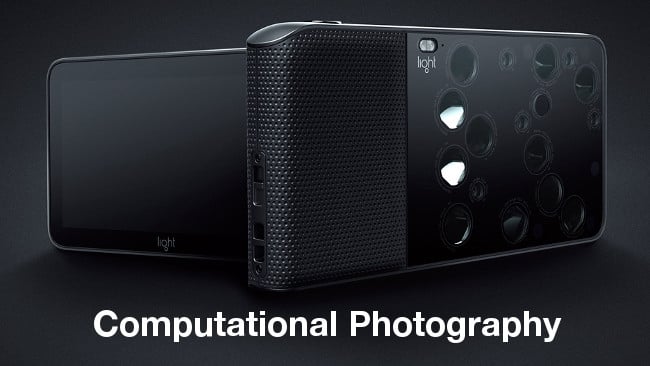

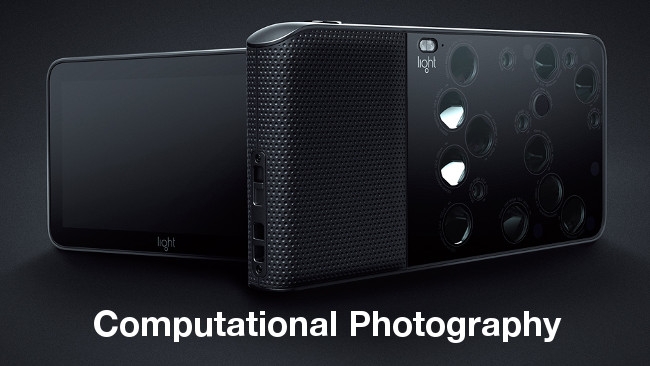

Computational Photography Explained

A close look at 2015's standout technological breakthrough and its enormous potential to transform cameras, lenses, and, well...almost everything we do.

As Patrick Jong Taylor recently touched on in his article on upcoming technologies, computational photography is an important technique now and is likely to become more so in the immediate future. What's less widely realised is that, given some of the techniques now under development, it's likely to be literally the biggest change in optical imaging in a thousand years.

We're starting to see real-world examples of how computing can make very high quality pictures from potentially quite simple optical arrangements, and how these pictures can be adjusted and improved way beyond what would be possible with any other technique.

The technology as it exists makes several of the things we've spent a lot of time developing almost irrelevant – lenses can be basic, spatial resolution barely matters, focus becomes vastly easier and things like precise camera positioning and stabilisation are issues for postproduction, not the camera department.

Lightfield explained

The term 'lightfield', at least in the context of film and TV imaging, is perhaps best understood by comparison to a conventional camera. For each point on the surface of an object in a scene, a conventional camera captures only the reflected light that happens to enter its lens. That light is focussed onto the sensor and forms part of the image of the object, but the sensor records only its brightness and colour, not the direction it arrived from. Give or take depth of field, this situation is simple and intuitive, but there are naturally many rays of light being reflected from the surface of the object which don't happen to fall into the lens of the camera. A lightfield recording includes more of the rays of light, along with the direction each one is travelling in – generally, all of those which reflect from an object and then pass through a rectangular area of space.

In some ways, this is as simple as saying that we take a lot of small cameras and place them side by side, so we can later choose one of several nearby angles to view the scene from. This is broadly how some current devices work: Fraunhofer's efforts use an array of separate cameras, while Lytro's cameras get a similar set of viewpoints from an array of microlenses in front of a single sensor. Strictly speaking, neither captures a true lightfield. To do that, we would need to detect all of the incoming rays at every single point in the field, which would require cameras the size of the wavelength of the light. To put it mildly, that isn't technology we have access to. Happily, an array of small cameras close together can capture enough data for computer interpolation to approximate what the scene might look like from any point between them, so we can have access to many of the advantages of lightfield capture without requiring cameras made out of the very finest, highly-refined unobtainium to shoot with.
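
As a rough illustration of that interpolation, here is a minimal Python sketch for a single row of pixels shared by two side-by-side cameras. It assumes we already know, for each pixel in the left camera's image, how far that pixel shifts in the right camera's image (its disparity). The function name `interpolate_view` and the toy data are invented for this example and aren't taken from any real lightfield system.

```python
def interpolate_view(left_row, disparity_row, t):
    """Forward-warp one row of pixels towards a virtual camera position.

    left_row      -- pixel values as seen by the left camera
    disparity_row -- per-pixel shift, in pixels, between left and right cameras
                     (assumed known; nearer objects have larger values)
    t             -- virtual camera position: 0.0 = left camera, 1.0 = right
    """
    width = len(left_row)
    out = [None] * width            # None marks a hole nothing mapped to
    nearest = [-1.0] * width        # largest disparity written to each pixel
    for x, (value, d) in enumerate(zip(left_row, disparity_row)):
        target = round(x - t * d)   # nearer pixels slide further
        if 0 <= target < width and d > nearest[target]:
            out[target] = value     # nearer objects hide farther ones
            nearest[target] = d
    return out


# Toy scene: a flat background (disparity 0) with a two-pixel
# foreground object (values 40 and 50, disparity 2) in front of it.
left_row = [10, 20, 30, 40, 50, 60]
disparity_row = [0, 0, 0, 2, 2, 0]

halfway = interpolate_view(left_row, disparity_row, 0.5)
print(halfway)  # [10, 20, 40, 50, None, 60]
```

The foreground object slides one pixel to the left for a camera halfway between the two real ones, covering part of the background and leaving a hole (`None`) where the virtual camera sees something the left camera could not. A practical system would presumably fill such holes using the other cameras in the array, and would first have to estimate the disparities that this sketch takes as given.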

Amazing potential

The possibilities are almost endless: most obviously, we can choose any camera position within the array, with true perspective shifting, not just sliding an image around. This means a lightfield can produce the views for both eyes of a stereoscopic image. Also, by combining images from various very slightly different virtual camera positions distributed within a small circle, we can produce an accurate simulation of the effects of various aperture sizes – or, simply put, variable depth of field. By detecting the offset between parts of the image as viewed by different cameras, we can estimate how far each object is from the camera and thereby create a three-dimensional model of the scene, which can be used in visual effects or grading work. That last opportunity – which includes the ability to perform postproduction re-lighting with correct three-dimensional data – is an enormous advancement on its own, although we can expect to see some nervous directors of photography.
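
Two of those ideas are simple enough to sketch in a few lines of Python. The first function averages several views after sliding each one in proportion to its camera's position, a common approach usually called shift-and-add refocusing; the second converts an offset (disparity) into a distance using the standard pinhole stereo relationship, depth = focal length × baseline ÷ disparity. Both work on single rows of pixels and assume ideal, perfectly aligned cameras. The names `refocus` and `depth_from_disparity`, and all the numbers, are made up for illustration.

```python
def refocus(views, offsets, focus_disparity):
    """Simulate a wide aperture by averaging shifted views.

    views           -- one row of pixels per camera, all the same length
    offsets         -- each camera's position, in units of the camera spacing
    focus_disparity -- pixel shift per unit of camera offset for objects
                       that should end up sharp
    """
    width = len(views[0])
    out = []
    for x in range(width):
        total, count = 0.0, 0
        for row, u in zip(views, offsets):
            src = x - round(u * focus_disparity)  # where this camera saw it
            if 0 <= src < width:
                total += row[src]
                count += 1
        out.append(total / count if count else 0.0)
    return out


def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Pinhole stereo: distance in metres from an offset in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Three cameras in a row. A single bright point sits at pixel 4 in the
# central view and shifts by 2 pixels per camera position.
views = [
    [0, 0, 0, 0, 0, 0, 1, 0, 0],  # camera at -1
    [0, 0, 0, 0, 1, 0, 0, 0, 0],  # camera at  0
    [0, 0, 1, 0, 0, 0, 0, 0, 0],  # camera at +1
]
offsets = [-1, 0, 1]

sharp = refocus(views, offsets, 2)  # focused on the point
soft = refocus(views, offsets, 0)   # focused at infinity instead
```

With the focus set to the point's own disparity, the three views line up and the point stays a single full-brightness pixel; with the focus set elsewhere, it is smeared across three pixels at a third of the brightness each, which is the defocus blur a larger aperture would produce. More cameras spread over a wider area would give a smoother and stronger blur.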

Practical implementations of lightfields may include one high-grade camera, generally in the centre of the array, to produce the image that the audience will see, with others around it to produce the lightfield data. There are naturally problems, and many of them are the same ones that afflict stereoscopy: mismatched lenses and mechanical lineup, lens flares, and very nearby objects that appear vastly different as seen by different cameras. Ultimately, though, lightfields may finally present us with a use for the ever-improving technology of sensors. With ever-higher resolution becoming harder to justify or sell to anyone, the ability to make good-quality, high-resolution, physically compact sensors with good dynamic range, to form part of lightfield arrays, is likely to become increasingly important.

The ideas of ancient China, where the camera obscura was first described, have been the basis for optical imaging for more than a thousand years. People then projected images through a small opening into a dark room (the word 'camera', famously, simply means 'room' or 'chamber'). We're still using the same principle; we've simply added lenses and sensors, and kept making them better, sharper and more sensitive. Should lightfield technology catch on, it will finally, more than a millennium later, genuinely change a thousand-year-old game.
