Free D

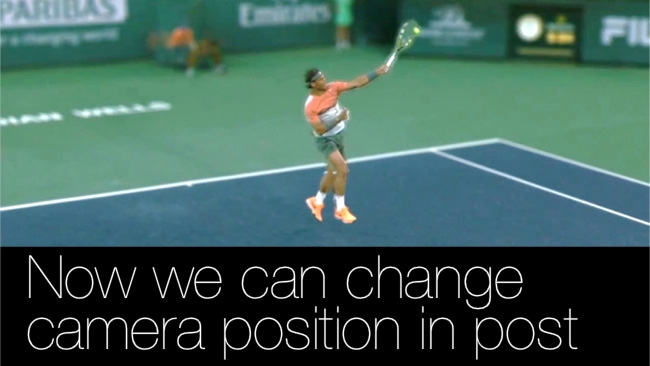

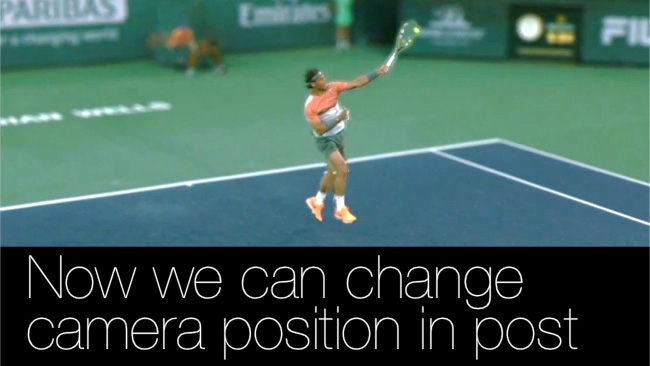

This is the best demonstration yet of something we've been predicting for ages: the ability to see your production set from an angle where there's no real camera. This is a sports-based demonstration but, mark our words, you'll be seeing this on a cinema set within the next few years.

We've seen hints of it for a while now, in Google Maps, Microsoft's Photosynth, and even in The Matrix. What we're talking about here is the ability to film an event from multiple angles, and then interpolate between these camera positions to create a credible image from a perspective where there was no camera.

I've been speculating about this for a long time. In 2005 I wrote in Newsreel Magazine (an offshoot of the much-missed Showreel Magazine):

"...it may just be possible to arrange a complete array of cameras, suitably spaced, so that by analysing and concatenating the combined output it would be possible to generate a speculative “virtual” viewpoint."

Now there's a company that not only claims to have done this but actually has a product in use. Here's an example of their technology, so that you can see what we mean.

Made it work

Replay Technologies has made this work. As you can see, they are able to move a "virtual" camera around the entire stadium, so that you can "fly around" tennis players and see them from any angle. At present it's a still image, like a "bullet time" scene in The Matrix, but there's no reason, apart from the sheer demand for computing power, why this shouldn't work in full-motion video.

The company isn't exactly modest about the future direction of its products, but the scale of its claims is justified, although you have to wonder whether evidence "obtained" from a virtual camera will ever be acceptable:

"We aim to disrupt the fundamental operating cost structure for broadcasting, cinema and other fields (biomedical, security, TV viewing, etc.) by implementation of the concept of placing viewing angles and cameras where none existed in reality"

This really is the start of the ability to specify your camera position in post. This is massive. It's as big as the jump from still to moving images.

Imagine the cost savings if you can "fly" a virtual camera round a real film set, just like you can in a CGI animation. You won't have to put cameras on cranes, and your moves can go anywhere, in any direction, as smoothly as you could possibly hope for.

Explanation

How does it work? Here's the company's own explanation:

"Up until now, video, broadcasting, and film has consisted of cameras capturing two-dimensional image data, which is essentially a sequence of changing flat “pixels” that represent reality. These images are then processed by either post-production facilities, or by ever-growing consumer applications, and end up transmitted and shared digitally.

Our technology works by capturing reality not as just a two-dimensional representation, but as a true three-dimensional scene, comprised of three-dimensional “pixels” that faithfully represent the fine details of the scene. This information is stored as a freeD™ database, which can then be tapped to produce (render) any desired viewing angle from the detailed information.

This enables a far superior way of capturing reality, which allows breaking free from the constraints of where a physical camera with a particular lens had been placed, allowing a freedom of viewing which has endless possibilities.

freeD™ allows producers and directors to create “impossible” camera views of a given moment in time as seen in Yankee Baseball YES View, a tremendous freeD™ innovation. As display technologies get better and more advanced, freeD™ will allow the user to get fully and interactively immersed in the content."
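To make the idea of rendering "any desired viewing angle" from stored three-dimensional "pixels" a little more concrete, here is a minimal sketch in Python. It is not Replay Technologies' algorithm, which the company doesn't describe in detail. It simply assumes the scene is a list of coloured points and projects them through a simple pinhole camera model; the function names, the focal length and the image size are all invented for illustration.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)

def render(voxels, eye, target, focal=50.0, width=64, height=48):
    """Project coloured 3D points into a virtual pinhole camera.

    Hypothetical helper: `voxels` is a list of ((x, y, z), colour) pairs,
    `eye` is the virtual camera position and `target` the point it looks at.
    World z is treated as "up", so the camera must not look straight up or down.
    Returns {(px, py): colour}, keeping the nearest point for each pixel.
    """
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, (0.0, 0.0, 1.0)))
    up = cross(right, forward)
    nearest = {}  # (px, py) -> (depth, colour)
    for position, colour in voxels:
        rel = sub(position, eye)
        depth = dot(rel, forward)
        if depth <= 0:
            continue  # point is behind the virtual camera
        px = int(round(width / 2 + focal * dot(rel, right) / depth))
        py = int(round(height / 2 - focal * dot(rel, up) / depth))
        if 0 <= px < width and 0 <= py < height:
            if (px, py) not in nearest or depth < nearest[(px, py)][0]:
                nearest[(px, py)] = (depth, colour)
    return {pixel: colour for pixel, (depth, colour) in nearest.items()}

# Two points, one directly behind the other when seen from the origin.
scene = [((0, 5, 0), "red"), ((0, 10, 0), "blue")]

front = render(scene, eye=(0, 0, 0), target=(0, 1, 0))
side = render(scene, eye=(10, 5, 0), target=(0, 5, 0))
print(front)  # only the red point is visible; blue is hidden behind it
print(side)   # from the side, both points are visible
```

The point of the sketch is that the camera position is just a parameter: the same stored scene gives a different picture each time `eye` and `target` change, with no physical camera involved.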

Cameras

And in case you were wondering about their cameras:

"We are currently using cutting-edge technology with Teledyne Dalsa Falcon 2 cameras.

Each camera has a full resolution of 12 Mega Pixels. We crop down 12MP down to 9.3MP which is the equivalent of 4K Technology. All cameras are synced and are shooting the exact same play at the same time. The data from the cameras flows down via fiber extenders (made by Thinklogical) to a distance of up to 10KM."
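For a sense of scale, the quoted figures can be checked with a little arithmetic. The sketch below uses only the 12MP and 9.3MP numbers from the quote, plus the two frame sizes commonly called "4K" (4096 x 2160 for DCI and 3840 x 2160 for UHD). Which of these the company means by "4K" is not stated, so the comparison is an assumption on our part.

```python
SENSOR_MP = 12.0   # full sensor resolution, from the quote above
CROPPED_MP = 9.3   # cropped resolution, from the quote above

# Common "4K" frame sizes; the quote does not say which one is meant.
FRAME_SIZES = {"DCI 4K": (4096, 2160), "UHD 4K": (3840, 2160)}

def megapixels(width, height):
    """Pixel count of a frame, in millions of pixels."""
    return width * height / 1_000_000

retained = CROPPED_MP / SENSOR_MP
print(f"The crop keeps {retained:.1%} of the sensor's pixels")
for name, (width, height) in FRAME_SIZES.items():
    print(f"{name}: {megapixels(width, height):.2f} MP")
```

On these numbers the 9.3MP crop is slightly larger than either common 4K frame, which is consistent with the company describing it as "the equivalent of 4K".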

Don't be fooled into thinking that this is just some esoteric sports analysis system. There is some very important stuff here. If and when we are truly able to place a virtual camera anywhere on the set, and when the results are indistinguishable from how they would appear if coming from a "real" camera, then the production industry will have been truly transformed.

That so much can be done already is remarkable, but there are a few hurdles to jump before the next stage.

Not perfect - yet!

First, the images aren't perfect. You can see that when the system is in Virtual Camera mode, the characters have a somewhat model-like appearance. They don't look real - as in "flesh and blood". There's almost a tilt-shift effect. That's not an issue for sports analysis, but it would be for making feature films.

Second, you can see changes in the image as the system interpolates between real camera positions. This is not at all surprising because it's a lot to ask. If there was no real camera at that position, then all the elements of the interpolated image have to be derived from the other camera positions. What you see here is remarkable, but it would have to be improved for general film production.

But all of this comes down to orders of magnitude in computing power. It might be that we need ten, a hundred or a thousand times more of it to get an acceptable result. But that's not a problem. Just wait eight or ten years or so.
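How long the wait is depends on how quickly computing power grows. As a rough sketch, assuming steady exponential growth, the time needed is the number of doublings (the base-2 logarithm of the speed-up) multiplied by the time each doubling takes. The doubling periods below are assumptions chosen for illustration, not figures from the company.

```python
import math

def years_until(speedup, doubling_period_years):
    """Years for computing power to grow by `speedup`, assuming it doubles
    once every `doubling_period_years` (an idealised, steady growth model)."""
    return math.log2(speedup) * doubling_period_years

for speedup in (10, 100, 1000):
    for period in (1.0, 1.5, 2.0):  # assumed doubling periods, in years
        years = years_until(speedup, period)
        print(f"{speedup:>5}x at one doubling every {period} years: {years:.1f} years")
```

A thousandfold increase is almost exactly ten doublings, so it takes about ten years if power doubles annually and about twenty if it doubles every two years. A hundredfold increase needs fewer than seven doublings.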

And a decade or less is a very short time to prepare for a total change in the production industry. We need to start thinking about this stuff right now. It is going to affect us, and we will need new production skills in using it.

And think about what we'll be seeing prototypes of in ten years' time!

Thanks to Jared Hinde for bringing this to our attention!
