Moiré is an often discussed subject when it comes to cameras, but what really causes it, and how can it be dealt with?

This is one of those articles where you need to be viewing this web page at 100% scale. If the block below doesn't look like a completely even grey pattern, zoom in or out until it does.

If this block doesn't look roughly like a mid-grey square, adjust your settings

Most people know what a moiré pattern is. It’s that strange moving pattern of stripes that sometimes appears when moving past two nearby fences with lots of vertical poles. Cameras, too, are often accused – if that's the right word – of having moiré problems, but it isn't necessarily clear how those stripes in fences relate to the coloured ripples that can appear in some cameras. What's going on?

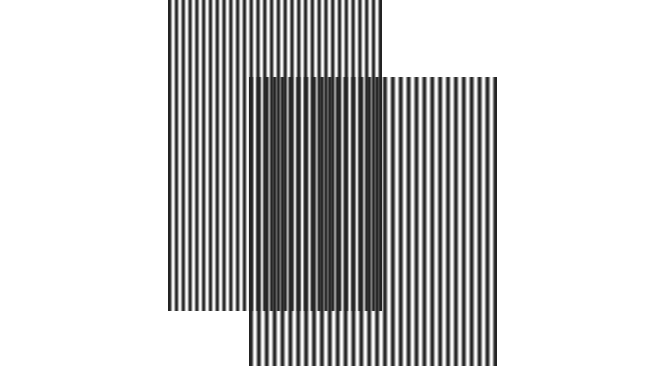

To get a better handle on this, let's consider what happens in that classic case of two fences. Since one of them is a bit further away than the other, it looks smaller, and the verticals seem closer together. Overlay them one on top of the other and the difference in apparent spacing creates a striped effect. This is a trick that's been used quite a bit to produce simple animated effects in books, where we can slide a striped transparent sheet over printed stripes to create moving wave effects.

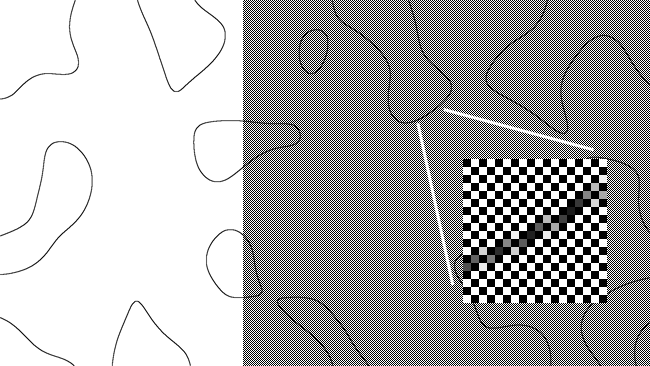

The lower pattern has slightly wider spacing than the upper; the interaction between the two creates an interference pattern
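The fence effect can be put into numbers. The sketch below is an illustration rather than anything from a real camera pipeline: it assumes two sets of stripes with pitches of 10 and 11 units (made-up values), and the function names are invented for this example. The broad stripes of the interference pattern repeat at the "beat" period of the two pitches, which is their product divided by their difference.

```python
from fractions import Fraction

def moire_period(pitch_a, pitch_b):
    """Beat period of two stripe sets: pitch_a * pitch_b / |pitch_a - pitch_b|."""
    a, b = Fraction(pitch_a), Fraction(pitch_b)
    if a == b:
        raise ValueError("identical pitches produce no beat pattern")
    return a * b / abs(a - b)

def stripes(pitch, width):
    """One row of a fence: 1 where a post blocks the view, 0 where it is open."""
    return [1 if (x % pitch) < pitch / 2 else 0 for x in range(width)]

def overlay(row_a, row_b):
    """Looking through both fences: a point is dark if either fence blocks it."""
    return [a | b for a, b in zip(row_a, row_b)]

if __name__ == "__main__":
    combined = overlay(stripes(10, 220), stripes(11, 220))
    # Print the combined row; the open gaps bunch up and thin out periodically.
    print("".join("#" if v else "." for v in combined))
    print("beat period:", moire_period(10, 11))
```

The closer the two pitches are, the longer the beat period, which is why two nearly identical fences produce such broad, slow-moving bands.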

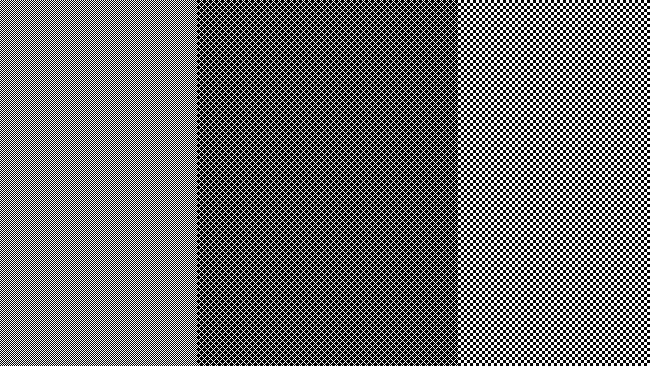

This sort of thing works in two dimensions as well. We can demonstrate this with two grids of squares in a checkerboard pattern. The smaller one has one-pixel squares; the larger one has three-pixel squares. The result is something that's difficult to predict: we get a pattern of diamonds, or diagonal squares if you like. (The fact that these diagonal squares also seem to be three by three pixels is a coincidence. Since they're on the diagonal, they're not the same size as the squares in either of the checkerboard patterns.)

Two superimposed checkerboard patterns also create moiré patterns
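For anyone who'd like to experiment with this, here is a minimal sketch that builds the two checkerboards and stacks them. How the layers are combined is an assumption (a pixel is treated as dark if either layer is dark, as with two printed transparencies), and the function names are invented for illustration.

```python
def checker(row, col, square):
    """1 (dark) or 0 (clear) for a checkerboard with squares of the given size."""
    return (row // square + col // square) % 2

def overlay(size, small=1, large=3):
    """Stack two checkerboards; a pixel is dark if either layer is dark."""
    return [
        [checker(r, c, small) | checker(r, c, large) for c in range(size)]
        for r in range(size)
    ]

if __name__ == "__main__":
    # Print the combined pattern as text so the interference can be inspected.
    for row in overlay(24):
        print("".join("#" if v else "." for v in row))
```

Changing `small` and `large` is a quick way to see how sensitive the combined pattern is to the ratio between the two grids.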

The relationship of this synthetic example to a camera's sensor, with its regular grid of pixels, is pretty obvious. The sensor only has one grid, of course, until we shine a light on it that sort-of approximates another grid, or at least has some fine detail in it. Even if we simply overlay our one-pixel checkerboard pattern over some curved lines, we start to see an angularity and grittiness in what was previously a smooth curve.

Moiré is a product of aliasing. Superimposing the checkerboard pattern over a set of curves reveals aliasing

At this point, we need to take a moment to define a subtle difference. What we're really seeing there is aliasing: the width of the curved lines is close to the size of the pixels, so the grid can't represent them accurately. Almost no matter how we define the relationship between aliasing and moiré, someone will argue. However, it's fairly safe to work on the basis that moiré is the result of aliasing in visual images. Lots of images can have aliasing, but patterns are only formed where the glitches caused by aliasing happen to line up. This is most likely to happen when a fairly regular, repeating pattern is landing on the sensor. Brick walls are a classic example, although anything with a repeating pattern can cause it. A mathematician, by the way, would consider this a somewhat periodic signal.

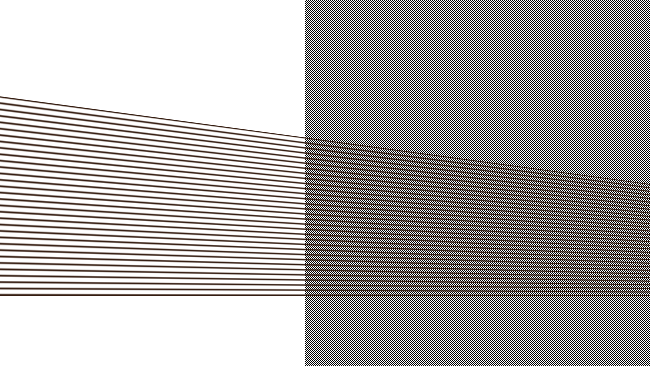

Converging horizontal lines alias in the same way - but the result also creates curved moiré patterns, because of the regular spacing of the lines
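A one-dimensional sketch shows why regular detail finer than the pixel grid turns into a coarse pattern. It assumes idealised point sampling at whole-pixel positions and measures detail in cycles per pixel; the function names are made up for this example. Anything above half a cycle per pixel "folds" back to a lower frequency, and the samples are indistinguishable from those of that lower frequency.

```python
import math

def alias_frequency(signal_freq, sample_rate=1.0):
    """Apparent frequency after sampling, folded into the range 0..sample_rate/2."""
    folded = signal_freq % sample_rate
    return min(folded, sample_rate - folded)

def sample_cosine(freq, n_pixels):
    """Point-sample cos(2*pi*freq*x) at integer pixel positions."""
    return [math.cos(2 * math.pi * freq * n) for n in range(n_pixels)]

if __name__ == "__main__":
    fine = sample_cosine(0.9, 20)    # detail finer than the grid can resolve
    coarse = sample_cosine(0.1, 20)  # the much coarser pattern it masquerades as
    print("apparent frequency:", alias_frequency(0.9))
    print("samples match:", all(math.isclose(a, b, abs_tol=1e-9)
                                for a, b in zip(fine, coarse)))
```

Once the samples have been taken, nothing in them says whether the original detail was fine or coarse, which is why the problem has to be tackled before the light reaches the pixels.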

How do we solve this problem? Easy. Blur out the image.

Low-pass filtering

That mathematician would call this low-pass filtering, and camera people might be familiar with the existence of an optical low-pass filter in some (not all) digital cinematography cameras. All that filter does is blur the image a controlled and constant amount. If your camera doesn't have a low-pass filter, you can use external filters or even arrange for the object that's causing the moiré patterning to be slightly out of focus. The optical low-pass filter simply ensures that everything is slightly blurred, by the same amount, regardless of how far away from the camera it is.

Doesn't that cause a loss of resolution? Why yes, it does, and if we filter sufficiently to remove all aliasing, it causes quite a big loss of resolution, more than many people would be happy to put up with. Notice that the test image above isn't quite perfect, for this reason. Optical low-pass filtering on modern cameras is always a compromise between visible aliasing, with its resulting moiré, on the one hand and sharpness on the other.
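The compromise can be sketched numerically. This example assumes the low-pass filter behaves like a simple box blur two pixels wide, which is a convenient stand-in and not how a real optical low-pass filter is built; the function names and numbers are illustrative only. It compares how much of a too-fine pattern (0.9 cycles per pixel) and of a legitimate fine detail (0.4 cycles per pixel) survives the blur.

```python
import math

def sample(freq, n_pixels, blur_width=0.0, taps=200):
    """Sample cos(2*pi*freq*x) at integer pixels, box-blurring over blur_width first."""
    out = []
    for n in range(n_pixels):
        if blur_width == 0:
            out.append(math.cos(2 * math.pi * freq * n))
            continue
        acc = 0.0
        for k in range(taps):
            # Average the signal over a window centred on the pixel position.
            x = n + ((k + 0.5) / taps - 0.5) * blur_width
            acc += math.cos(2 * math.pi * freq * x)
        out.append(acc / taps)
    return out

def amplitude(samples):
    """Half the peak-to-peak swing of the sampled values."""
    return (max(samples) - min(samples)) / 2

if __name__ == "__main__":
    for freq in (0.9, 0.4):
        sharp = amplitude(sample(freq, 100))
        soft = amplitude(sample(freq, 100, blur_width=2.0))
        print(f"{freq} cycles/pixel: unfiltered {sharp:.2f}, blurred {soft:.2f}")
```

Under these assumptions the aliased pattern falls to roughly a tenth of its original strength, but the legitimate detail is also cut to roughly a quarter, which is the loss of sharpness described above.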

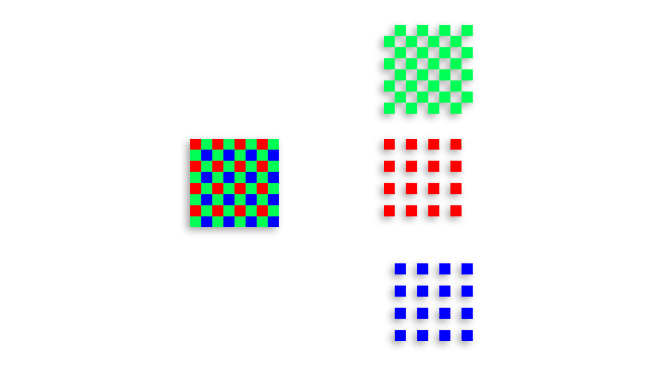

Hang on, though. Why are we using a checkerboard image to demonstrate this? Don't camera sensors usually have a pixel at every position, rather than missing every other one?

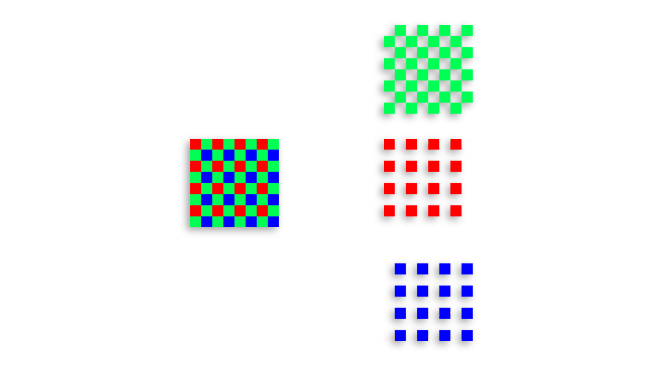

Well, yes and no. The grid of pixels on a camera's sensor is continuous, but in a single-chip colour camera, there's a pattern of red, green and blue filters on the front. We can consider these as three virtual sensors, each of which has a lot of holes in it.

A single-chip colour camera has red, green and blue filters on its sensor. This results in different spacing for the red and blue filters as compared to the green

This starts to explain why moiré patterns on some cameras end up coloured. The red, green and blue pixels aren't all in the same place, so they create different aliasing and the resulting moiré patterns end up in different places. A difference between the red, green and blue channels creates coloured patterns. Camera manufacturers use a variety of clever mathematics to work around the considerations intrinsic to single-sensor colour cameras, but there are some problems that are very difficult to solve. Chief among them is that the spacing between the coloured filters is much larger on the red and blue channels than on the green channel, so designing a reasonable optical low-pass filter is very difficult. The red and blue channels have huge gaps between their pixels, requiring a stronger filter than the green channel, but if we filter the whole chip that strongly, the loss of resolution will be too much. It's a tricky compromise.
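The difference in spacing is easy to count. The sketch below assumes the common Bayer arrangement with an RGGB layout, which the article doesn't name explicitly, and the function names are invented for illustration. It reports what share of the sensor each colour gets and how far each site is from its nearest neighbour of the same colour.

```python
import math
from collections import Counter

def bayer_colour(row, col):
    """Filter colour at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def channel_share(size=8):
    """Fraction of sensor sites carrying each colour filter."""
    counts = Counter(bayer_colour(r, c) for r in range(size) for c in range(size))
    return {colour: counts[colour] / (size * size) for colour in "RGB"}

def nearest_same_colour(colour, size=8):
    """Distance in pixels from one site to the closest site of the same colour."""
    sites = [(r, c) for r in range(size) for c in range(size)
             if bayer_colour(r, c) == colour]
    r0, c0 = sites[0]
    return min(math.hypot(r - r0, c - c0) for r, c in sites[1:])

if __name__ == "__main__":
    print(channel_share())
    for colour in "RGB":
        print(colour, "nearest neighbour:", round(nearest_same_colour(colour), 3))
```

With this layout, green sites are both twice as numerous and more closely packed than red or blue ones, so a blur strong enough to suit red and blue is stronger than green needs.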

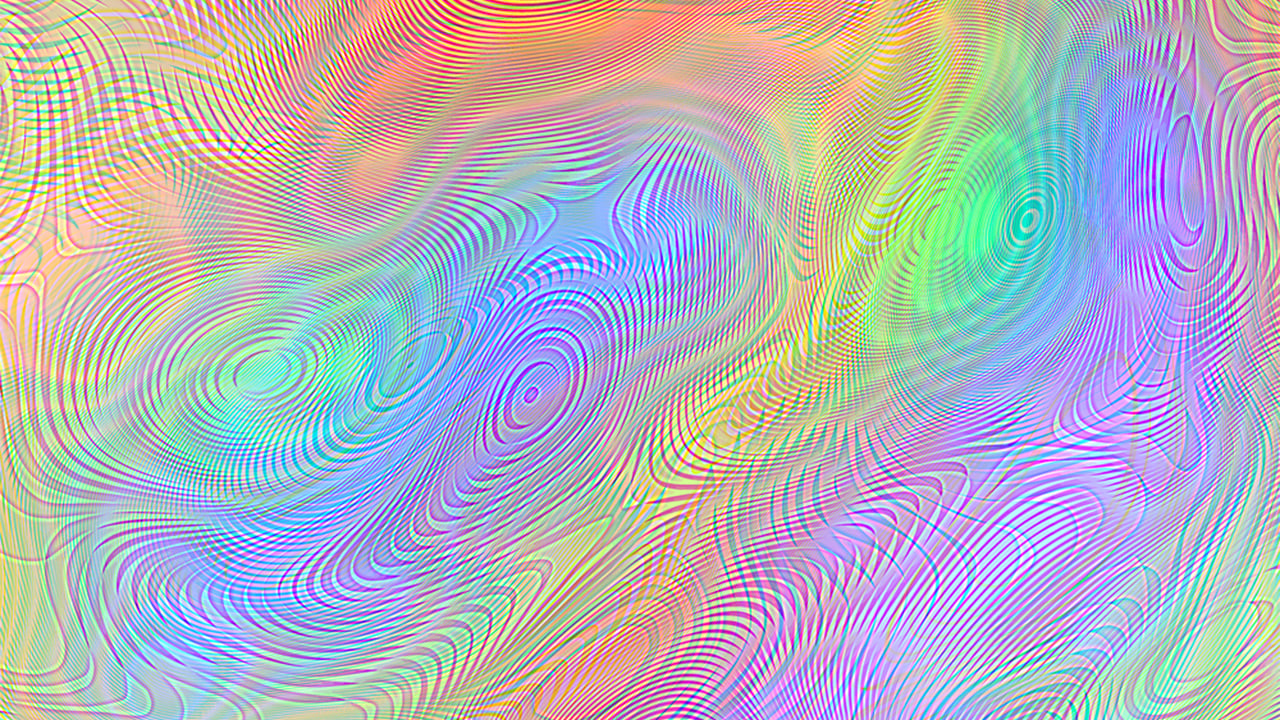

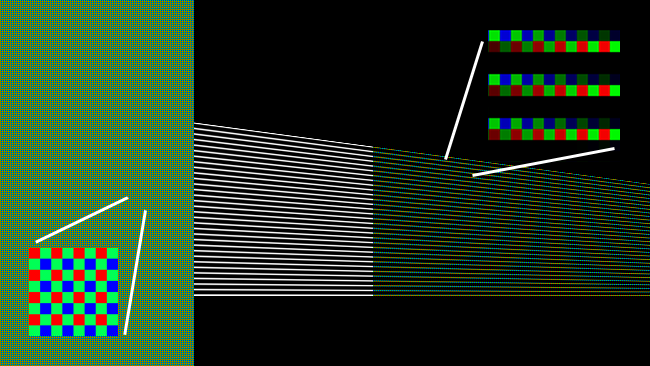

Moiré patterns

Now that we understand what's going on, let's look at an actual example of coloured moiré patterns. What we're looking at here is the colour pattern of the sensor on the left and, in the middle, the image of almost horizontal lines that falls on the sensor. On the right, we're seeing the colour pattern of the sensor with the lines overlaid on it. Because the lines are only a couple of pixels high, and because they are on a slight tilt, they alternately reveal the red and green sensor sites, which look yellow, then the blue and green sensor sites, which look cyan.

At the left, we see the pattern of colour filters on the sensor. In the middle, we see the converging lines the camera is imaging. At right, the result (without antialiasing or postprocessing)
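The alternation between yellow and cyan can be reproduced with a very small model. It assumes an RGGB Bayer layout, a white line exactly one pixel high, and a gentle tilt of one row for every eight columns; all of these are simplifications chosen for illustration, and the names are hypothetical. As the line drifts from one sensor row to the next, the set of filters it lights up changes.

```python
def bayer_colour(row, col):
    """Filter colour at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

# Which tint results when only these filter colours receive light.
TINTS = {frozenset("RG"): "yellow", frozenset("GB"): "cyan"}

def tints_along_line(slope, width, segment):
    """Tint of each segment of a thin, tilted white line crossing the sensor."""
    tints = []
    for start in range(0, width, segment):
        lit = set()
        for col in range(start, start + segment):
            row = int(col * slope)  # the single sensor row the line lights here
            lit.add(bayer_colour(row, col))
        tints.append(TINTS.get(frozenset(lit), "white"))
    return tints

if __name__ == "__main__":
    # One row of drift per eight columns, examined in eight-column segments.
    print(tints_along_line(slope=0.125, width=32, segment=8))
```

A line that lands on rows containing red and green filters reads as yellow, and one that lands on rows containing green and blue reads as cyan, so a white line on a slight tilt shows up as alternating bands of the two.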

The three-panel illustration isn't representative of most real cameras, because it doesn't involve any antialiasing or the softening of real-world lenses, and it doesn't simulate any post processing. Rather, it's an example of the information the camera might get from the sensor; somehow, it has to work out that those lines should be white, as opposed to alternating cyan and yellow. The fact that antialiasing and post processing are never perfect means that quite a lot of cameras will do this at least a tiny bit when we shoot exactly the right sort of subject. Knowing this can help us avoid troublesome subjects.

Or, if we're really backed into a corner, find ourselves with a perfect excuse to shoot something very, very slightly out of focus. If we think we can get away with it.

Title image courtesy of Shutterstock.
