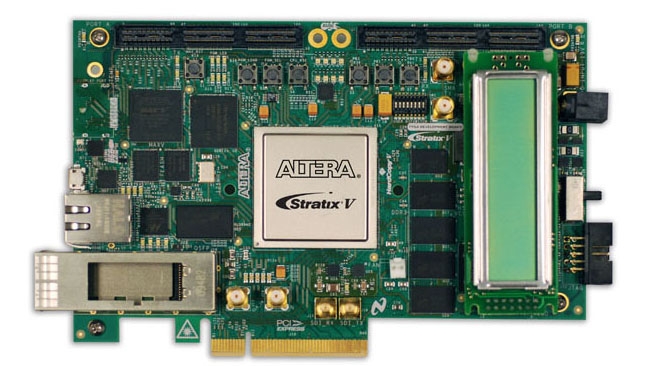

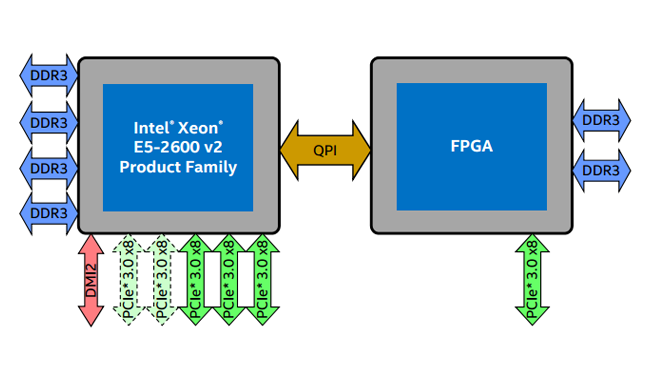

This Altera Stratix V FPGA is on a PCIe card, but Intel's new parts will put one alongside the CPU.

Intel's purchase of Altera sets the stage for CPUs that come equipped with their own field programmable gate arrays. And that could change a lot of things.

When Intel paid US$16.7 billion for Altera, it clearly had some specific ideas about what the acquisition might bring. Altera is, perhaps primarily, a manufacturer of field-programmable gate arrays (FPGAs), the programmable logic devices used to build high-performance digital hardware when the market isn't large enough to justify having a semiconductor foundry make custom chips. In film and television, they're used in things like PCIe SDI input-output cards, format converters and cameras – the principal difference between Sony and Blackmagic's output, for instance, is the fact that the latter builds its cameras around FPGAs.

"Parallel yet very different"

The combination of Altera with Intel was therefore interesting because the two companies did, and do, parallel yet very different things. Intel gives us CPUs with a list of functions (addition, multiplication, division, subtraction – there are a couple of hundred permutations) and we write software that expresses what we want to do in terms of the things the CPU can do. FPGAs are different – they consist, at least in theory, of a huge number of logic devices which can be connected together in complex ways using firmware that can be loaded at any time. Thus, if we happen to want a processing device that does, say, nothing but a specific type of long division on nine-bit numbers, we can build FPGA firmware that will do that, and only that, at very high speed.

A conventional CPU can do that as well, but it would generally need to bump the nine-bit value up to 16, the next-largest multiple of eight, and use one of its general-purpose division operations. Then, crucially, if we wanted the FPGA to do something different on the following day or the following second, we'd only have to ask it to load some new firmware.
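To make the nine-bit example concrete, here is a minimal sketch in Python. It is an illustration only, not real FPGA firmware, and the function names are made up for this article. The first function performs long division one bit at a time using just shifts, comparisons and subtractions, which is roughly the sort of fixed-width logic an FPGA design might wire up. The second imitates the CPU approach: widen the values to 16 bits and use a general-purpose divide.

```python
from typing import Tuple

WIDTH = 9                  # operand width in bits, from the article's example
MASK = (1 << WIDTH) - 1    # largest nine-bit value, 511


def divide_bit_serial(dividend: int, divisor: int) -> Tuple[int, int]:
    """Restoring long division: produce one quotient bit per step.

    Hypothetical helper. Each loop iteration stands in for one stage
    of shift / compare / subtract logic.
    """
    if not 0 <= dividend <= MASK or not 0 < divisor <= MASK:
        raise ValueError("operands must fit in nine bits; divisor must be non-zero")
    quotient = 0
    remainder = 0
    for bit in range(WIDTH - 1, -1, -1):
        # Bring down the next bit of the dividend.
        remainder = (remainder << 1) | ((dividend >> bit) & 1)
        # If the divisor fits, subtract it and set this quotient bit.
        if remainder >= divisor:
            remainder -= divisor
            quotient |= 1 << bit
    return quotient, remainder


def divide_widened(dividend: int, divisor: int) -> Tuple[int, int]:
    """CPU-style approach: zero-extend to 16 bits, then use a general divide."""
    wide_dividend = dividend & 0xFFFF
    wide_divisor = divisor & 0xFFFF
    return wide_dividend // wide_divisor, wide_dividend % wide_divisor


if __name__ == "__main__":
    print(divide_bit_serial(500, 7))   # (71, 3)
    print(divide_widened(500, 7))      # (71, 3)
```

Both routes give the same answer. The difference is that the bit-serial version never needs anything wider than the problem actually demands, which is the kind of saving dedicated logic can exploit.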

On the same chip

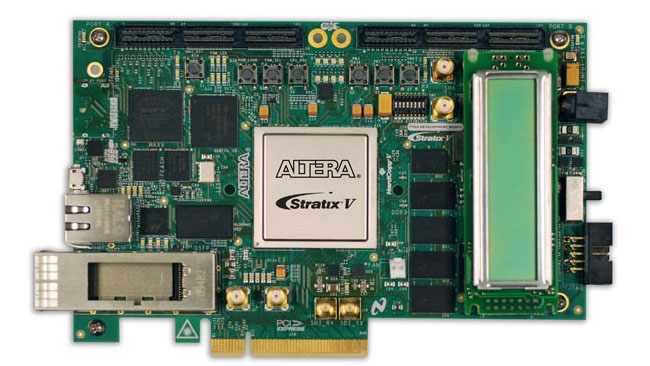

It's been possible for some time to put an FPGA on a plug-in card and connect it to a computer. That's been done in fields such as finance, where the algorithms for dubious practices such as high-frequency trading (reckoned to contribute to volatility in the securities markets, as in May 2010) can be run very quickly. What Intel has been proposing to do (and is due to release around now) is to put the FPGA and a more conventional Xeon CPU core on the same device, as a plug-in replacement for existing high-end CPUs. The advantage is that the CPU and the FPGA can communicate more directly and with lower latency, though how much depends on exactly how the combined device is designed. In any case there will necessarily be a driver layer, presumably published by Intel, to allow software engineers to take advantage of the CPU's new friend in the same way they would a GPU.

The FPGA and CPU might talk via the speedy QuickPath Interconnect, which usually talks to other CPUs and the IO hub.

Graphic credit: Intel

Intel's intention appears to be that this technology will first be deployed in 'datacenters', those obscure warehouses full of nineteen-inch racks and network cabling where the internet really seems to live. It seems likely, though, that inquiring minds in many industries will find ways to make these new developments useful. Presumably, as ever, the early adopter prices will be horrendous, and there's only talk of releasing them to specific large clients in the first instance, but it doesn't seem likely that Intel would have paid billions of dollars just to keep everyone else out of the picture. The idea of every After Effects filter having its own custom hardware implementation is deeply appealing, although that sort of application is clearly years away, given the inertia of plugin vendors alone.

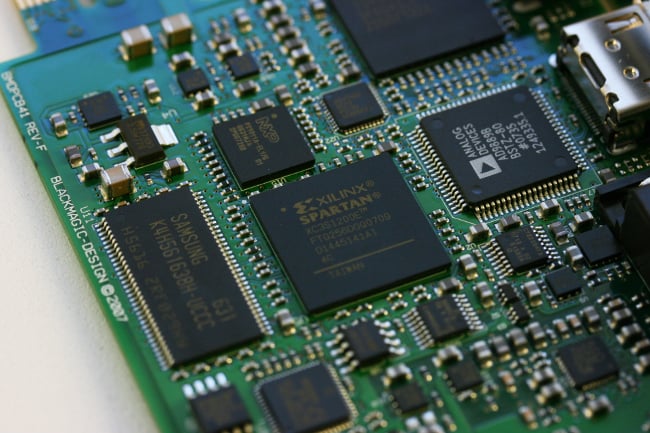

This Blackmagic Intensity is a more conventional application of FPGAs (here a Xilinx part is visible).

All of this, of course, is a very good thing. As we discussed a few weeks ago, there are some serious performance concerns over the state of conventional CPU technology. Even if higher clock speeds were easily achievable (which they aren't) and even if the development tools for really good multi-core programming were up to the job (which they aren't), there's the continuing issue that the bulk of the market is in smartphones.

These low-power-consumption platforms have driven an unprecedented increase in performance per watt, but not so much in absolute performance. Access to a large, capable FPGA might go some way to offsetting the stagnation of desktop CPU performance in general and, even if only for specific tasks, that's better than nothing.
