Another chance to read why you should seriously consider 4K acquisition if you're ending in high def.

It's becoming increasingly normal for the equipment we use to work at resolutions significantly beyond HD. Actual distribution of 4K pictures is currently in its infancy. However, since 4K cameras produce especially nice HD results when downscaled, it's perhaps worth discussing some of the benefits of shooting 4K for an HD finish.

Consider sharpness

Perhaps most obviously, sharpness is a factor. Pictures acquired from an imaging sensor that doesn't significantly outresolve the output image will never entirely satisfy that image's Nyquist limits on resolution. That is, even if we consider a monochrome imaging sensor of, for instance, 1920 by 1080 pixels, the requirement for low-pass filtering to overcome aliasing means that the results will be less sharp than an ideal HD frame could theoretically contain.

This becomes even more the case for single-chip colour cameras. With their Bayer or Bayer-like multicoloured filters, these cameras require even more aggressive low-pass filtering (that is, blurring) to prevent aliasing in the widely-spaced blue and red elements, and suffer yet more imprecision and loss of resolving power, especially of colour-saturated subjects, when the necessary interpolation is performed to resolve a full-colour image. A 4K monochrome sensor can't ideally fill a 4K image; a 4K Bayer sensor even less so.

More or less the only camera routinely configured so that its imaging sensor has much greater resolution than its output frame is the Sony F65, although many other cameras, such as the Alexa, can be configured so that at least some extra resolution is available and the resulting output image looks that much better. It is a notorious failing of DSLRs such as the Canon 5D Mk. II (and even its more recent sibling, the Mk. III) that they don't sample the entire imaging sensor and then carefully downscale the result, which would avoid aliasing and produce images of great sharpness, low noise, and overall high quality. The degree of oversampling (the practice of recording more than the required information and averaging it to remove errors) would be enormous when considering the ten-plus megapixel imager of a DSLR against the two-megapixel HD frame.
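The difference between skipping sensor samples and averaging them can be sketched in a few lines of Python. This is a one-dimensional illustration only: the function names and the stripe pattern are hypothetical, and a simple box average stands in for the more careful filters a real scaler would use.

```python
def decimate(row, factor):
    """Keep every `factor`-th sample and discard the rest (no low-pass filter)."""
    return row[::factor]


def box_downscale(row, factor):
    """Average each group of `factor` neighbouring samples into one output sample."""
    return [
        sum(row[i:i + factor]) / factor
        for i in range(0, len(row) - factor + 1, factor)
    ]


# Hypothetical test pattern: alternating black (0) and white (255) samples,
# the finest detail this row of samples can hold.
stripes = [0, 255] * 8

skipped = decimate(stripes, 2)        # every kept sample lands on a black stripe
averaged = box_downscale(stripes, 2)  # each black/white pair blends to mid-grey

print(skipped)
print(averaged)
```

Skipping samples turns the stripes into a solid black field, which is aliasing: detail too fine for the output has been misrepresented as something else. Averaging yields a uniform grey, which is the honest answer when the output cannot resolve the pattern.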

Better colour precision

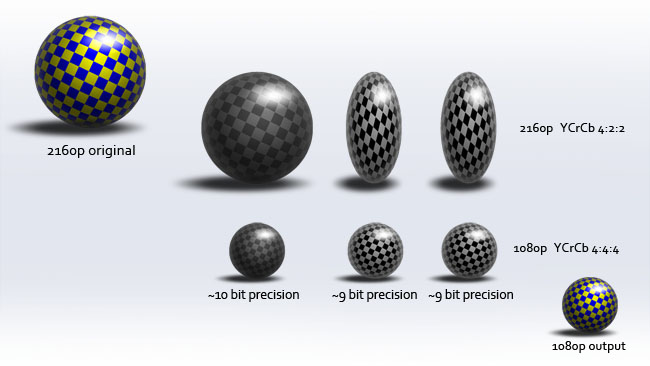

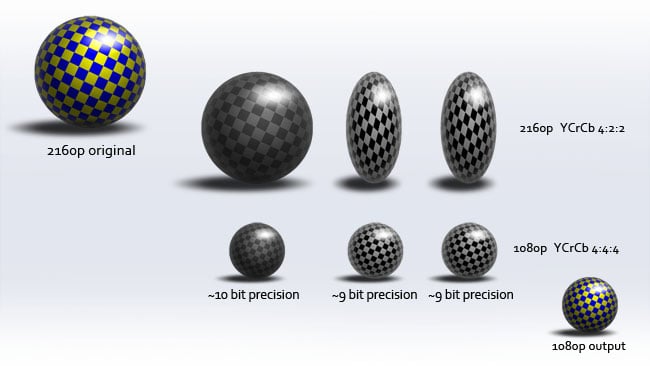

Besides sharpness, there are other advantages of shooting 4K for an HD finish. Many file formats and interconnects, such as HDMI, are 8-bit, meaning that each of the three components (be that RGB or a variant of YUV) is represented with only 256 digital code values. All but the lowest-end film and television work is now done with systems providing ten bits, or 1024 levels per channel, of precision. But at least some of this precision can be recovered by recording a 4K (or, let's say, Quad HD) frame and downscaling it. There are then four QHD pixels for each HD pixel, meaning that some number of QHD pixels may be averaged (and in fact must be, to avoid aliasing in the output image) to produce a single output pixel of greater luminance precision. The further fact that the sum of four 8-bit pixel values always fits within the range of a single 10-bit pixel value, since 4 x 256 = 1024, is a coincidence and a technical convenience; we could as well average four 10-bit pixels and store the result in 16 bits, leaving some of the container's potential precision unused.
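As a rough illustration of the arithmetic, the sketch below sums each 2x2 block of 8-bit values into one output value. The function name and the sample patch are hypothetical, and the code ignores gamma encoding and compression entirely; it only shows that the sums land on levels that a single 8-bit value cannot express.

```python
def downscale_2x2_sum(frame):
    """Sum each 2x2 block of 8-bit values into one value in the range 0..1020."""
    height, width = len(frame), len(frame[0])
    return [
        [
            frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


# Hypothetical 4x4 patch of 8-bit code values. Three blocks hover between
# codes 100 and 101; the fourth is fully white to show the largest possible sum.
patch = [
    [100, 101, 100, 100],
    [100, 100, 101, 101],
    [101, 101, 255, 255],
    [101, 100, 255, 255],
]

result = downscale_2x2_sum(patch)
print(result)
```

The sums 401, 402 and 403 correspond to 100.25, 100.5 and 100.75 on the 8-bit scale, and the largest possible sum, 4 x 255 = 1020, still fits below the 10-bit ceiling of 1023. How much of that extra precision is real depends on the noise and processing in the source.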

Whether a 10-bit 1920 by 1080 frame derived from an 8-bit 3840 by 2160 frame can genuinely be said to contain accurate 10-bit data is something people will argue about, especially since most 8-bit QHD frames will not contain a linear representation of luminance, instead having been processed with a gamma function such as that defined in Rec. 709. This complicates any consideration of the matter in terms of formal information theory, and there are also concerns over the fact that many such 8-bit frames, perhaps such as those recorded to SD cards by Sony NEX-series cameras, will also have reasonably harsh compression. It's still reasonable, though, to expect considerably improved colour precision as a result of oversampling and downscaling larger, lower-bit-depth frames into a smaller, higher-bit-depth frame.

Overcoming subsampling

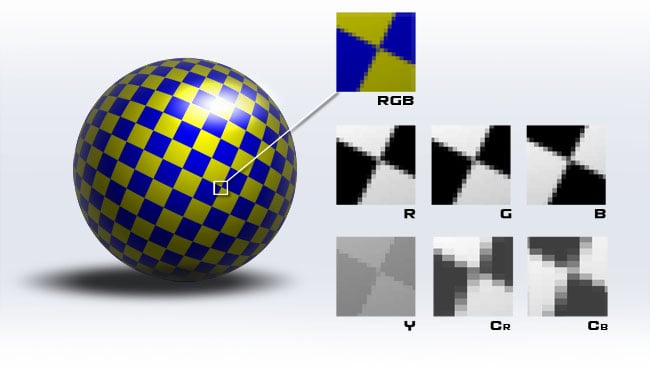

There are other advantages in terms of colour precision. Many 8-bit images will, as a purely practical matter, find themselves represented as some variant on what's generally referred to as YUV data, wherein the Y channel represents a black-and-white image, and the U and V channels represent the colour difference between that greyscale and the blue and red RGB channels, respectively. The usefulness of this, for those who haven't encountered it before, is that the human eye sees detail most acutely in terms of brightness, perceiving colour information at lower effective resolution. The ratios often used in relation to this approach to storing images, such as 4:2:2 and 4:1:1, refer to a reduction in resolution of the U and V channels, a technique referred to as subsampling. Higher-end broadcast formats generally use 4:2:2 subsampling, with the U and V channels stored at half the horizontal resolution of the greyscale Y channel, reducing the space requirements to store the image by a third without obvious penalties to image quality.
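The "by a third" figure can be checked with a little arithmetic. The sketch below assumes the usual reading of the J:a:b notation (a block J pixels wide and two rows high, with a chroma samples in the first row and b in the second); the function names are made up for illustration, and 4:2:0 is included only for comparison.

```python
def samples_per_pixel(j, a, b):
    """Average number of stored samples per pixel for a J:a:b scheme.

    The block is J pixels wide and 2 rows high. Y is stored for every pixel;
    U and V each store `a` samples for the first row and `b` for the second.
    """
    luma = 2 * j
    chroma = 2 * (a + b)  # two chroma channels, each with a + b samples
    return (luma + chroma) / (2 * j)


def saving_vs_444(j, a, b):
    """Fraction of uncompressed storage saved relative to full 4:4:4."""
    return 1 - samples_per_pixel(j, a, b) / samples_per_pixel(4, 4, 4)


for scheme in [(4, 4, 4), (4, 2, 2), (4, 1, 1), (4, 2, 0)]:
    print(scheme, samples_per_pixel(*scheme), round(saving_vs_444(*scheme), 3))
```

Under those assumptions 4:2:2 stores two samples per pixel instead of three, a saving of one third, while 4:1:1 and 4:2:0 both store one and a half, a saving of one half.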

This approach works well for distribution and for acquisition of material which will not be heavily processed in post production. In fact, with low compression ratios, it tends to work reasonably well for many applications. It can begin to fail when heavy grading or techniques such as chromakey are used, where the image is processed by something that isn't a human eye and which can see fine detail in colour information. Downscaling a 4K or QHD frame to HD, however, can clearly yield U and V channels without subsampling, permitting a more complete conversion to an un-subsampled result - perhaps an RGB image with identical colour and brightness resolution. There are caveats: converting between YUV and RGB data is intrinsically slightly lossy, since there are colours that either system can represent that the other can't, and it's difficult, mathematically, to claim both the un-subsampled benefit and the 10-bit-accuracy benefit simultaneously; the benefit differs for the luminance and chrominance information in this case. But, again, in practice, at least some benefit will exist.
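Why the downscale removes the subsampling is easiest to see by counting samples. The sketch below compares the size of the chroma planes in a 3840 by 2160 source with a 1920 by 1080 target; the helper names are hypothetical, and it deliberately ignores chroma siting and filtering, which a real conversion would have to handle.

```python
def chroma_plane_size(width, height, h_div, v_div):
    """Size of each chroma plane when chroma resolution is divided by
    h_div horizontally and v_div vertically."""
    return width // h_div, height // v_div


def chroma_covers_target(chroma_size, target_size):
    """True if the source holds at least one chroma sample per target pixel
    in both directions."""
    return chroma_size[0] >= target_size[0] and chroma_size[1] >= target_size[1]


HD = (1920, 1080)

qhd_422 = chroma_plane_size(3840, 2160, 2, 1)  # chroma halved horizontally
qhd_420 = chroma_plane_size(3840, 2160, 2, 2)  # chroma halved in both directions
qhd_411 = chroma_plane_size(3840, 2160, 4, 1)  # chroma quartered horizontally

for name, size in [("4:2:2", qhd_422), ("4:2:0", qhd_420), ("4:1:1", qhd_411)]:
    print(name, size, chroma_covers_target(size, HD))
```

On this count, a QHD source with 4:2:2 or 4:2:0 subsampling already carries at least one U and one V sample for every HD pixel, whereas a 4:1:1 source would still fall short horizontally.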

Wait, there's more...

There are other advantages to oversampling. Noise is reduced (on linear data) ideally by an amount representing more than a stop of either additional sensitivity or additional highlight range. On set, a focus puller can refer to a 4K display in the confidence that the display is better than the final result will be. Reframing or stabilisation can be performed in postproduction without compromising image quality. And of course, a production finished in HD now might conceivably be remastered in 4K in the future. So, even if there is only a limited market for 4K images at the moment, it can still make a lot of sense to acquire them.
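The noise figure mentioned above can be sanity-checked with a small simulation. This is a sketch under stated assumptions: the noise is modelled as independent Gaussian noise on linear data, which real sensor noise only approximates, and the function names, level, and sigma are arbitrary choices for illustration.

```python
import math
import random
import statistics


def noisy_samples(count, level, sigma, seed):
    """Generate `count` linear-light samples of a flat field with Gaussian noise."""
    rng = random.Random(seed)
    return [level + rng.gauss(0.0, sigma) for _ in range(count)]


def average_groups(samples, group):
    """Average each run of `group` consecutive samples into one output sample."""
    return [
        sum(samples[i:i + group]) / group
        for i in range(0, len(samples) - group + 1, group)
    ]


source = noisy_samples(40000, level=0.5, sigma=0.05, seed=1)
downscaled = average_groups(source, 4)  # four source pixels per output pixel

noise_ratio = statistics.pstdev(source) / statistics.pstdev(downscaled)
print(round(noise_ratio, 2), "times less noise, about",
      round(math.log2(noise_ratio), 2), "stops")
```

Averaging exactly four samples roughly halves the noise, which is about one stop. A full 4096 by 2160 frame has a little more than four pixels for each HD pixel, so the ideal figure comes out slightly higher.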
