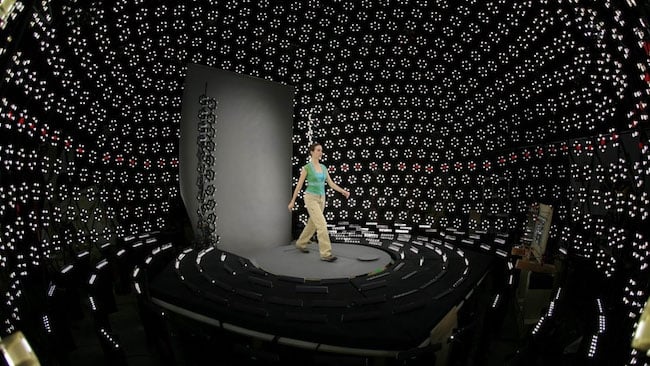

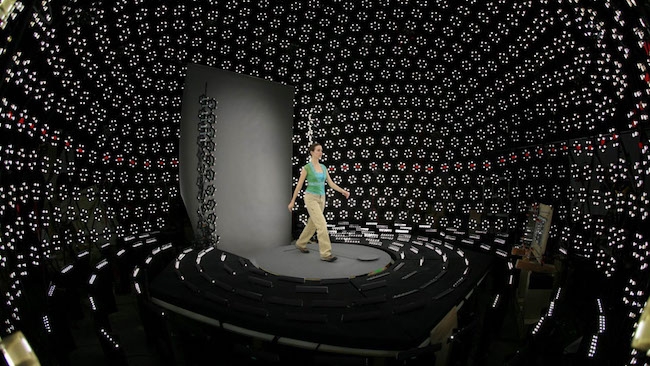

An original Light Stage

All the world's a (lighted) stage. With the growing interest in computational photography, might we one day see entire movie studios fitted out with a version of Paul Debevec's Oscar-winning Light Stage idea?

The original papers on Light Stage were released at SIGGRAPH in 2000, and Debevec's clever lighting technique is probably the most prominent and well-developed example of computational photography. It's designed to allow a subject – just a human being, usually, because of physical size restrictions – to be photographed performing any required action, then relit in post to create any desired lighting environment.

In particular, images of a real environment have been used to define that lighting environment, so material shot on a stage can be matched with high precision to a real-world location. This involves the common technique of photographing a chrome sphere, capturing a 360-degree spherical image of the surroundings which may be used in visual effects, especially 3D rendering, to create accurate reflections and lighting.

Until Debevec's work, though, there was no very practical way of lighting a real-world object in this way (though compare the work done for Gravity, in which actors performed inside boxes made of LED video displays, to approximate the technique).

The approach used for Light Stage, though, is deceptively simple. If we take a photograph of an object being lit from the right, in an otherwise blacked-out room, and combine that in Photoshop with a photograph of the same object lit from the left, the result looks exactly as the object would with both lights on at once. If we tint the image representing the left-hand light blue, then it looks like the light on the left was blue, while the one on the right remains white. Expand that idea, using hundreds of light sources distributed around a sphere and taking hundreds of reference photos, and any lighting environment can be simulated by combining those photos in various proportions and with various colour processing. There are limits on resolution because there is a practical limit to how many light sources can be packed into the available space, but the technique is more than capable of simulating a lighting environment convincing enough for filmmaking.
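The two-light experiment above can be sketched in a few lines of Python with NumPy. This is only an illustration of the weighted-sum idea, not Debevec's actual pipeline: the function name `relight`, the tiny 2×2 test images and the particular blue tint are all made up for the example, and it assumes the photographs are stored as linear-light values (gamma-encoded images would need linearising first for the sum to behave like real light).

```python
import numpy as np

def relight(basis_images, light_colours):
    """Combine one-light-at-a-time photos into a single relit image.

    basis_images:  N photos of shape (H, W, 3), each lit by one light only.
    light_colours: N RGB triples giving the colour and intensity that each
                   light should have in the simulated environment.
    """
    basis = np.asarray(basis_images, dtype=np.float64)    # (N, H, W, 3)
    colours = np.asarray(light_colours, dtype=np.float64)  # (N, 3)
    # Tint every photo by its light's colour, then add the photos together.
    return np.einsum("nhwc,nc->hwc", basis, colours)

# Hypothetical 2x2 "photographs": one lit from the right, one from the left.
right_lit = np.full((2, 2, 3), 0.25)
left_lit = np.full((2, 2, 3), 0.5)

# Both lights on, both white: simply the sum of the two photos.
both_on = relight([right_lit, left_lit], [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]])

# Same two photos, but the left-hand light is tinted blue.
blue_left = relight([right_lit, left_lit], [[1.0, 1.0, 1.0], [0.2, 0.4, 1.0]])
```

With hundreds of reference photos the call is the same; only the list of per-light colours grows, and those colours could be taken from an image of the environment being matched.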

That works nicely for still objects, but if the subject is moving – as with a person – there's a problem. We need to capture hundreds of lighting references per finished film frame. If the subject moves between the individual exposures, then when we combine the different lighting references later there will be fringing, blurring or strange-looking double exposure effects.

The original Light Stage – and remember this was fifteen years ago – first took the direct approach of using the fastest available high-speed cameras to minimise the difference in subject position between reference frames. Then, because that doesn't entirely solve the problem, it applied motion compensation (the technique used to slow footage down without jerkiness) to correct the apparent position of the subject in each lighting reference, removing the motion, and to calculate motion blur back into the finished composite.
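To give a flavour of what "correcting the apparent position" means, here is a deliberately simplified sketch. It is not the method Light Stage used: real motion compensation estimates per-pixel motion, and has to cope with the lighting changing between exposures. This stand-in only finds a single whole-frame shift by phase correlation, on a synthetic frame, and the function name `estimate_correction` is an invention for the example.

```python
import numpy as np

def estimate_correction(reference, moved):
    """Return the integer (dy, dx) roll that re-aligns `moved` with `reference`.

    Uses phase correlation, which only models one global, wrap-around shift.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    cross = f_ref * np.conj(f_mov)
    cross /= np.abs(cross) + 1e-12          # keep only the phase difference
    corr = np.fft.ifft2(cross).real         # peaks at the correcting shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = reference.shape
    # Peaks past the halfway point represent negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

# Synthetic test: a random frame, and a copy in which the "subject" has
# moved 3 pixels down and 2 pixels left between exposures.
rng = np.random.default_rng(0)
reference = rng.random((32, 32))
moved = np.roll(reference, shift=(3, -2), axis=(0, 1))

correction = estimate_correction(reference, moved)
realigned = np.roll(moved, shift=correction, axis=(0, 1))
```

Once every lighting reference has been pulled back into register like this, the references can be combined without the fringing and double-exposure artefacts described above.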

Light Stage was a research project, but was sufficiently usable to have been deployed on several films, from Spider-Man 2 to Avatar. The sophistication is pretty impressive: because the precision of the simulated lighting solution is limited by the number of different lighting references captured, it's desirable to capture a large number of them. Simultaneously, though, we need to capture all of the lighting references at least once for every frame of final output. If final output is at 24 frames per second, we must shoot one frame of every possible lighting angle every twenty-fourth of a second. Practical applications found that the motion compensation was actually good enough to shoot every lighting reference only once every twelfth of a second – simulating twelve frames per second total capture rate – and then interpolate the results back to normal rates in post processing.
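The arithmetic behind that trade-off is easy to check. The sketch below assumes a hypothetical rig with 150 lighting references; that figure is an illustration only, not the count used on any real Light Stage, and `required_camera_fps` is a made-up helper name.

```python
def required_camera_fps(num_lighting_refs, passes_per_second):
    """Camera frame rate needed to shoot every lighting reference
    `passes_per_second` times each second."""
    return num_lighting_refs * passes_per_second

lighting_refs = 150  # hypothetical number of lighting references

# One full pass through the lights for every 24 fps output frame.
full_rate = required_camera_fps(lighting_refs, 24)   # 3600 exposures/second

# One full pass every twelfth of a second, interpolated up to 24 fps in post.
half_rate = required_camera_fps(lighting_refs, 12)   # 1800 exposures/second

print(full_rate, half_rate)
```

Halving the pass rate halves the camera speed required, or alternatively allows twice as many lighting references at the same camera speed, which is why good motion compensation matters so much here.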

More advanced tricks, such as comparing the apparent colour of the illuminated object under light at different angles, allow software to work out the angle at which each part of the object is oriented to the light and the camera. This in turn allows reflectivity to be simulated, so it's at last possible to shoot an actor and render an image in which he or she is made of chrome, or, with a partial effect, simply looks slightly, well, sweaty.
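One textbook way to recover surface orientation from several differently lit exposures is photometric stereo. The sketch below is that textbook version, not necessarily what the Light Stage software does: it works on brightness for a single pixel, assumes a perfectly matte (Lambertian) surface with every light actually reaching the point, and uses made-up light directions and readings. The name `estimate_normal` is likewise invented for the example.

```python
import numpy as np

def estimate_normal(light_dirs, intensities):
    """Estimate the surface normal and albedo at one pixel.

    light_dirs:  (N, 3) unit vectors pointing from the surface to each light.
    intensities: (N,) brightness of the pixel under each light.
    Assumes brightness = albedo * dot(normal, light_dir) for every light.
    """
    L = np.asarray(light_dirs, dtype=np.float64)
    I = np.asarray(intensities, dtype=np.float64)
    # Least-squares solve of L @ g = I, where g = albedo * normal.
    g, *_ = np.linalg.lstsq(L, I, rcond=None)
    albedo = np.linalg.norm(g)
    return g / albedo, albedo

# Four hypothetical light directions and the brightness seen under each.
light_dirs = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.6, 0.0, 0.8],
]
intensities = [0.0, 0.3, 0.4, 0.32]

normal, albedo = estimate_normal(light_dirs, intensities)
```

Given a normal for every pixel, a renderer can work out what each point on the subject would reflect, which is what makes the chrome (or merely sweaty) actor possible.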

The downsides are fairly clear – the equipment is cumbersome, and while it would be an entertaining project to fit out an entire sound stage with spherical coverage of LED strobe lights to enable the technique to be used on a large scale, there are concerns about the amount of coverage that could be achieved with a reasonable amount of strobe lighting and a reasonable capture frame rate. Regardless, Light Stage is a great example of what computational photography is: the technique of recording a lot of data (often images) that describes a scene, then processing that data to produce the final result.
